Introduction: Big Data Machine Learning

- Definition: perform machine learning on big data.
- Role: key for big data.
  - The ultimate goal of big data processing is to mine value from data.
  - Machine learning provides the fundamental theory and computational techniques for big data mining and analysis.

Li (http://cs.nju.edu.cn/lwj) Big Learning CS, NJU 6 / 115

Introduction: Challenge

- Storage: memory and disk
- Computation: CPU
- Communication: network

Introduction: Our Contribution

- Learning to hash: reduces memory, disk, CPU, and communication costs
- Distributed learning: reduces memory, disk, and CPU costs, but increases communication cost
- Stochastic learning: reduces memory, disk, and CPU costs
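To give a rough sense of the memory and disk savings attributed to learning to hash, the following back-of-the-envelope sketch compares storing real-valued feature vectors with storing compact binary codes. The dataset size, feature dimensionality, and code length are illustrative assumptions, not figures from the talk, and the helper names are hypothetical.

```python
def storage_bytes_float(n_points, dim, bytes_per_value=4):
    """Bytes needed to store n_points real-valued vectors (float32 by default)."""
    return n_points * dim * bytes_per_value


def storage_bytes_binary(n_points, n_bits):
    """Bytes needed to store n_points binary hash codes of n_bits each."""
    return n_points * n_bits // 8


# Assumed example values (not from the source slides).
n_points = 1_000_000_000  # one billion database items
dim = 512                 # dimensionality of the original features
n_bits = 64               # length of each binary code

raw = storage_bytes_float(n_points, dim)      # original float32 features
codes = storage_bytes_binary(n_points, n_bits)  # binary codes
ratio = raw / codes

print(f"float32 features: {raw / 1e9:.0f} GB")
print(f"64-bit codes:     {codes / 1e9:.0f} GB")
print(f"reduction factor: {ratio:.0f}x")
```

Under these assumptions the codes are small enough to fit in the memory of a single machine, which is also why less data has to be moved over the network.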

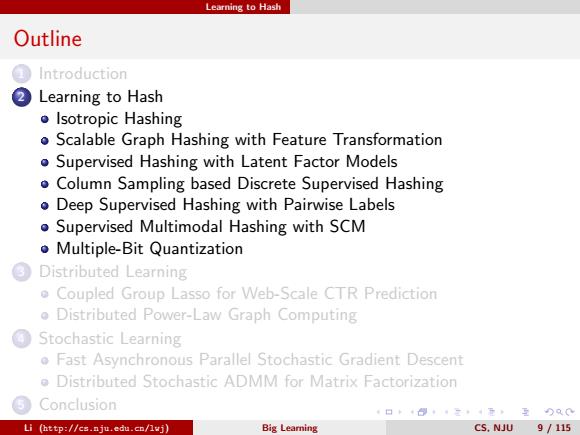

Learning to Hash: Outline

1. Introduction
2. Learning to Hash
   - Isotropic Hashing
   - Scalable Graph Hashing with Feature Transformation
   - Supervised Hashing with Latent Factor Models
   - Column Sampling based Discrete Supervised Hashing
   - Deep Supervised Hashing with Pairwise Labels
   - Supervised Multimodal Hashing with SCM
   - Multiple-Bit Quantization
3. Distributed Learning
   - Coupled Group Lasso for Web-Scale CTR Prediction
   - Distributed Power-Law Graph Computing
4. Stochastic Learning
   - Fast Asynchronous Parallel Stochastic Gradient Descent
   - Distributed Stochastic ADMM for Matrix Factorization
5. Conclusion

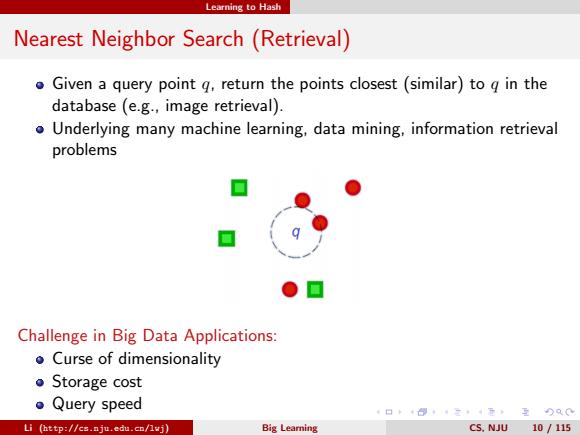

Learning to Hash: Nearest Neighbor Search (Retrieval)

Given a query point q, return the points closest (most similar) to q in the database (e.g., image retrieval).

- Underlies many machine learning, data mining, and information retrieval problems

Challenges in big data applications:

- Curse of dimensionality
- Storage cost
- Query speed
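The nearest neighbor search problem defined above can be sketched as an exhaustive linear scan. This is a minimal baseline, assuming Euclidean distance as the similarity measure and NumPy for the arithmetic. The function name `nearest_neighbors` and the toy data are hypothetical, and this is not one of the hashing methods covered in the talk.

```python
import numpy as np


def nearest_neighbors(database, query, k=1):
    """Return indices and Euclidean distances of the k database rows closest to query.

    Exhaustive linear scan: every query touches all n points and all d
    dimensions, so the cost per query is O(n * d).
    """
    diffs = database - query                          # shape (n, d)
    sq_dists = np.einsum("ij,ij->i", diffs, diffs)    # squared distance per row
    order = np.argsort(sq_dists, kind="stable")[:k]   # k smallest, ties by index
    return order, np.sqrt(sq_dists[order])


# Toy database of four 2-D points (assumed example data).
database = np.array([
    [0.0, 0.0],
    [1.0, 1.0],
    [3.0, 4.0],
    [0.9, 1.2],
])
query = np.array([1.0, 1.0])

idx, dist = nearest_neighbors(database, query, k=2)
print(idx, dist)
```

Because both time and memory grow linearly with the number of points and the dimensionality, this scan illustrates why storage cost and query speed become bottlenecks at big data scale.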