Chapter 2. Thread Safety

Listing 2.5. Servlet that Attempts to Cache its Last Result without Adequate Atomicity. Don't Do this.

    @NotThreadSafe
    public class UnsafeCachingFactorizer implements Servlet {
        private final AtomicReference<BigInteger> lastNumber
            = new AtomicReference<BigInteger>();
        private final AtomicReference<BigInteger[]> lastFactors
            = new AtomicReference<BigInteger[]>();

        public void service(ServletRequest req, ServletResponse resp) {
            BigInteger i = extractFromRequest(req);
            if (i.equals(lastNumber.get()))
                encodeIntoResponse(resp, lastFactors.get());
            else {
                BigInteger[] factors = factor(i);
                lastNumber.set(i);
                lastFactors.set(factors);
                encodeIntoResponse(resp, factors);
            }
        }
    }

Unfortunately, this approach does not work. Even though the atomic references are individually thread-safe, UnsafeCachingFactorizer has race conditions that could make it produce the wrong answer.

The definition of thread safety requires that invariants be preserved regardless of timing or interleaving of operations in multiple threads. One invariant of UnsafeCachingFactorizer is that the product of the factors cached in lastFactors equal the value cached in lastNumber; our servlet is correct only if this invariant always holds. When multiple variables participate in an invariant, they are not independent: the value of one constrains the allowed value(s) of the others. Thus when updating one, you must update the others in the same atomic operation.

With some unlucky timing, UnsafeCachingFactorizer can violate this invariant. Using atomic references, we cannot update both lastNumber and lastFactors simultaneously, even though each call to set is atomic; there is still a window of vulnerability when one has been modified and the other has not, and during that time other threads could see that the invariant does not hold. Similarly, the two values cannot be fetched simultaneously: between the time when thread A fetches the two values, thread B could have changed them, and again A may observe that the invariant does not hold.

To preserve state consistency, update related state variables in a single atomic operation.

2.3.1. Intrinsic Locks

Java provides a built-in locking mechanism for enforcing atomicity: the synchronized block. (There is also another critical aspect to locking and other synchronization mechanisms, visibility, which is covered in Chapter 3.) A synchronized block has two parts: a reference to an object that will serve as the lock, and a block of code to be guarded by that lock. A synchronized method is shorthand for a synchronized block that spans an entire method body, and whose lock is the object on which the method is being invoked. (Static synchronized methods use the Class object for the lock.)

    synchronized (lock) {
        // Access or modify shared state guarded by lock
    }

Every Java object can implicitly act as a lock for purposes of synchronization; these built-in locks are called intrinsic locks or monitor locks. The lock is automatically acquired by the executing thread before entering a synchronized block and automatically released when control exits the synchronized block, whether by the normal control path or by throwing an exception out of the block. The only way to acquire an intrinsic lock is to enter a synchronized block or method guarded by that lock.

Intrinsic locks in Java act as mutexes (or mutual exclusion locks), which means that at most one thread may own the lock. When thread A attempts to acquire a lock held by thread B, A must wait, or block, until B releases it. If B never releases the lock, A waits forever.
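As a rough illustration of the two forms described above, the following sketch pairs a synchronized method with the equivalent synchronized block and uses Thread.holdsLock to check which lock is held in each case. The class LockForms and its method names are invented for this example and do not appear in the original listings.

```java
// Hypothetical class for illustration only.
class LockForms {
    private int value; // guarded by "this"

    // Synchronized method: the lock is the object the method is invoked on.
    synchronized int incrementWithMethod() {
        return ++value;
    }

    // Equivalent synchronized block that names the same lock explicitly.
    int incrementWithBlock() {
        synchronized (this) {
            return ++value;
        }
    }

    // Inside a synchronized instance method the caller holds this object's lock.
    synchronized boolean holdsInstanceLock() {
        return Thread.holdsLock(this);
    }

    // Static synchronized methods lock on the Class object instead.
    static synchronized boolean holdsClassLock() {
        return Thread.holdsLock(LockForms.class);
    }

    public static void main(String[] args) {
        LockForms f = new LockForms();
        f.incrementWithMethod();
        f.incrementWithBlock();
        System.out.println(f.holdsInstanceLock());      // true
        System.out.println(LockForms.holdsClassLock()); // true
        System.out.println(Thread.holdsLock(f));        // false: released on exit
    }
}
```

The last check shows that the lock is released automatically once control leaves the synchronized method; no explicit unlock call is involved.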

Java Concurrency In Practice

Since only one thread at a time can execute a block of code guarded by a given lock, the synchronized blocks guarded by the same lock execute atomically with respect to one another. In the context of concurrency, atomicity means the same thing as it does in transactional applications: that a group of statements appear to execute as a single, indivisible unit. No thread executing a synchronized block can observe another thread to be in the middle of a synchronized block guarded by the same lock.

The machinery of synchronization makes it easy to restore thread safety to the factoring servlet. Listing 2.6 makes the service method synchronized, so only one thread may enter service at a time. SynchronizedFactorizer is now thread-safe; however, this approach is fairly extreme, since it inhibits multiple clients from using the factoring servlet simultaneously at all, resulting in unacceptably poor responsiveness. This problem, which is a performance problem and not a thread safety problem, is addressed in Section 2.5.

Listing 2.6. Servlet that Caches Last Result, But with Unacceptably Poor Concurrency. Don't Do this.

    @ThreadSafe
    public class SynchronizedFactorizer implements Servlet {
        @GuardedBy("this") private BigInteger lastNumber;
        @GuardedBy("this") private BigInteger[] lastFactors;

        public synchronized void service(ServletRequest req,
                                         ServletResponse resp) {
            BigInteger i = extractFromRequest(req);
            if (i.equals(lastNumber))
                encodeIntoResponse(resp, lastFactors);
            else {
                BigInteger[] factors = factor(i);
                lastNumber = i;
                lastFactors = factors;
                encodeIntoResponse(resp, factors);
            }
        }
    }

2.3.2. Reentrancy

When a thread requests a lock that is already held by another thread, the requesting thread blocks. But because intrinsic locks are reentrant, if a thread tries to acquire a lock that it already holds, the request succeeds. Reentrancy means that locks are acquired on a per-thread rather than per-invocation basis. [7] Reentrancy is implemented by associating with each lock an acquisition count and an owning thread. When the count is zero, the lock is considered unheld. When a thread acquires a previously unheld lock, the JVM records the owner and sets the acquisition count to one. If that same thread acquires the lock again, the count is incremented, and when the owning thread exits the synchronized block, the count is decremented. When the count reaches zero, the lock is released.

[7] This differs from the default locking behavior for pthreads (POSIX threads) mutexes, which are granted on a per-invocation basis.

Reentrancy facilitates encapsulation of locking behavior, and thus simplifies the development of object-oriented concurrent code. Without reentrant locks, the very natural-looking code in Listing 2.7, in which a subclass overrides a synchronized method and then calls the superclass method, would deadlock. Because the doSomething methods in Widget and LoggingWidget are both synchronized, each tries to acquire the lock on the Widget before proceeding. But if intrinsic locks were not reentrant, the call to super.doSomething would never be able to acquire the lock because it would be considered already held, and the thread would permanently stall waiting for a lock it can never acquire. Reentrancy saves us from deadlock in situations like this.

Listing 2.7. Code that would Deadlock if Intrinsic Locks were Not Reentrant.

    public class Widget {
        public synchronized void doSomething() {
            ...
        }
    }

    public class LoggingWidget extends Widget {
        public synchronized void doSomething() {
            System.out.println(toString() + ": calling doSomething");
            super.doSomething();
        }
    }
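The acquisition count of an intrinsic lock is not directly observable from Java code, so the sketch below uses java.util.concurrent.locks.ReentrantLock as a stand-in: it is also reentrant and exposes its per-thread count through getHoldCount. This is an analogy for the bookkeeping described above, not a view into how the JVM implements intrinsic locks; the class HoldCountDemo is invented for the example.

```java
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical demo class; ReentrantLock stands in for an intrinsic lock.
class HoldCountDemo {
    // Returns the hold counts observed before, during, and between acquisitions.
    static int[] observeCounts() {
        ReentrantLock lock = new ReentrantLock();
        int[] counts = new int[4];
        counts[0] = lock.getHoldCount();         // unheld: 0
        lock.lock();
        try {
            counts[1] = lock.getHoldCount();     // first acquisition: 1
            lock.lock();                         // same thread, so this succeeds
            try {
                counts[2] = lock.getHoldCount(); // reentrant acquisition: 2
            } finally {
                lock.unlock();
            }
            counts[3] = lock.getHoldCount();     // back to 1 after inner release
        } finally {
            lock.unlock();                       // count reaches 0, lock released
        }
        return counts;
    }

    public static void main(String[] args) {
        for (int c : observeCounts()) {
            System.out.println(c);               // prints 0, 1, 2, 1 on separate lines
        }
    }
}
```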

2.4. Guarding State with Locks

Because locks enable serialized [8] access to the code paths they guard, we can use them to construct protocols for guaranteeing exclusive access to shared state. Following these protocols consistently can ensure state consistency.

[8] Serializing access to an object has nothing to do with object serialization (turning an object into a byte stream); serializing access means that threads take turns accessing the object exclusively, rather than doing so concurrently.

Compound actions on shared state, such as incrementing a hit counter (read-modify-write) or lazy initialization (check-then-act), must be made atomic to avoid race conditions. Holding a lock for the entire duration of a compound action can make that compound action atomic. However, just wrapping the compound action with a synchronized block is not sufficient; if synchronization is used to coordinate access to a variable, it is needed everywhere that variable is accessed. Further, when using locks to coordinate access to a variable, the same lock must be used wherever that variable is accessed.

It is a common mistake to assume that synchronization needs to be used only when writing to shared variables; this is simply not true. (The reasons for this will become clearer in Section 3.1.)

For each mutable state variable that may be accessed by more than one thread, all accesses to that variable must be performed with the same lock held. In this case, we say that the variable is guarded by that lock.

In SynchronizedFactorizer in Listing 2.6, lastNumber and lastFactors are guarded by the servlet object's intrinsic lock; this is documented by the @GuardedBy annotation.
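A minimal sketch of the "same lock for every access" rule, using the hit counter mentioned above: both the read-modify-write increment and the plain read go through methods synchronized on the same object. The class GuardedCounter and its helper runConcurrently are hypothetical names chosen for this example.

```java
// Hypothetical class for illustration only.
class GuardedCounter {
    private long hits; // guarded by "this"

    // Write path: the read-modify-write runs with the lock held.
    synchronized void increment() {
        ++hits;
    }

    // Read path: guarded by the same lock as the write path.
    synchronized long get() {
        return hits;
    }

    // Starts several threads that each increment the counter, then
    // returns the final value once all of them have finished.
    static long runConcurrently(int threads, int perThread) {
        GuardedCounter counter = new GuardedCounter();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int n = 0; n < perThread; n++) {
                    counter.increment();
                }
            });
            workers[t].start();
        }
        try {
            for (Thread w : workers) {
                w.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return counter.get();
    }

    public static void main(String[] args) {
        System.out.println(runConcurrently(4, 10_000)); // 40000
    }
}
```

Because every increment holds the lock, no update can be lost, so the total always equals threads times perThread.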
There is no inherent relationship between an object's intrinsic lock and its state; an object's fields need not be guarded by its intrinsic lock, though this is a perfectly valid locking convention that is used by many classes. Acquiring the lock associated with an object does not prevent other threads from accessing that object; the only thing that acquiring a lock prevents any other thread from doing is acquiring that same lock. The fact that every object has a built-in lock is just a convenience so that you needn't explicitly create lock objects. [9] It is up to you to construct locking protocols or synchronization policies that let you access shared state safely, and to use them consistently throughout your program.

[9] In retrospect, this design decision was probably a bad one: not only can it be confusing, but it forces JVM implementers to make tradeoffs between object size and locking performance.

Every shared, mutable variable should be guarded by exactly one lock. Make it clear to maintainers which lock that is.

A common locking convention is to encapsulate all mutable state within an object and to protect it from concurrent access by synchronizing any code path that accesses mutable state using the object's intrinsic lock. This pattern is used by many thread-safe classes, such as Vector and other synchronized collection classes. In such cases, all the variables in an object's state are guarded by the object's intrinsic lock. However, there is nothing special about this pattern, and neither the compiler nor the runtime enforces this (or any other) pattern of locking. [10] It is also easy to subvert this locking protocol accidentally by adding a new method or code path and forgetting to use synchronization.

[10] Code auditing tools like FindBugs can identify when a variable is frequently but not always accessed with a lock held, which may indicate a bug.

Not all data needs to be guarded by locks, only mutable data that will be accessed from multiple threads. In Chapter 1, we described how adding a simple asynchronous event such as a TimerTask can create thread safety requirements that ripple throughout your program, especially if your program state is poorly encapsulated. Consider a single-threaded program that processes a large amount of data. Single-threaded programs require no synchronization, because no data is shared across threads. Now imagine you want to add a feature to create periodic snapshots of its progress, so that it does not have to start again from the beginning if it crashes or must be stopped. You might choose to do this with a TimerTask that goes off every ten minutes, saving the program state to a file.

Since the TimerTask will be called from another thread (one managed by Timer), any data involved in the snapshot is now accessed by two threads: the main program thread and the Timer thread. This means that not only must the TimerTask code use synchronization when accessing the program state, but so must any code path in the rest of the program that touches that same data. What used to require no synchronization now requires synchronization throughout the program.
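The snapshot scenario can be sketched roughly as follows. The Progress class, its two fields, and the 10-millisecond period (shortened from the ten minutes in the text, and printing instead of writing a file) are all assumptions made to keep the example small and runnable.

```java
import java.util.Timer;
import java.util.TimerTask;

// Hypothetical demo; names and timings are illustrative assumptions.
class SnapshotDemo {
    // Program state shared by the main thread and the Timer thread.
    static class Progress {
        private long itemsDone; // guarded by "this"
        private long checksum;  // guarded by "this"

        // Called by the main thread; updates both fields under the lock.
        synchronized void record(long item) {
            itemsDone++;
            checksum += item;
        }

        // Called by the Timer thread; reads both fields under the same lock,
        // so it never sees one field updated without the other.
        synchronized long[] snapshot() {
            return new long[] { itemsDone, checksum };
        }
    }

    public static void main(String[] args) {
        Progress progress = new Progress();
        Timer timer = new Timer(true); // daemon Timer thread
        timer.schedule(new TimerTask() {
            @Override
            public void run() {
                long[] s = progress.snapshot();
                System.out.println("snapshot: done=" + s[0] + " checksum=" + s[1]);
            }
        }, 0, 10);

        // The formerly single-threaded work loop must now synchronize too,
        // which it does by going through record().
        for (long i = 1; i <= 1_000_000; i++) {
            progress.record(i);
        }
        timer.cancel();
    }
}
```

Note that the main loop had to change as well: once the Timer thread reads the state, the code that writes it can no longer touch the fields without holding the lock.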

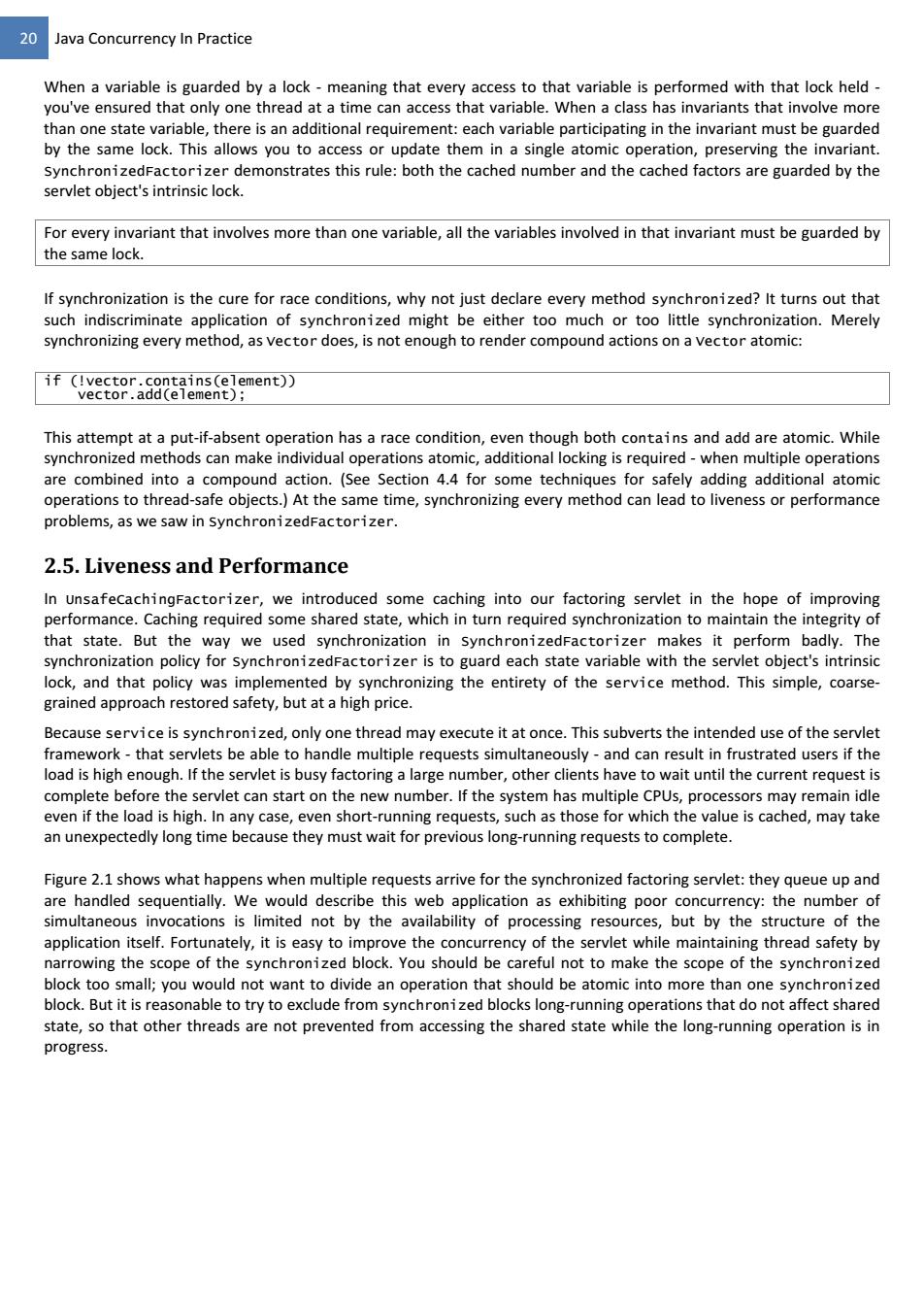

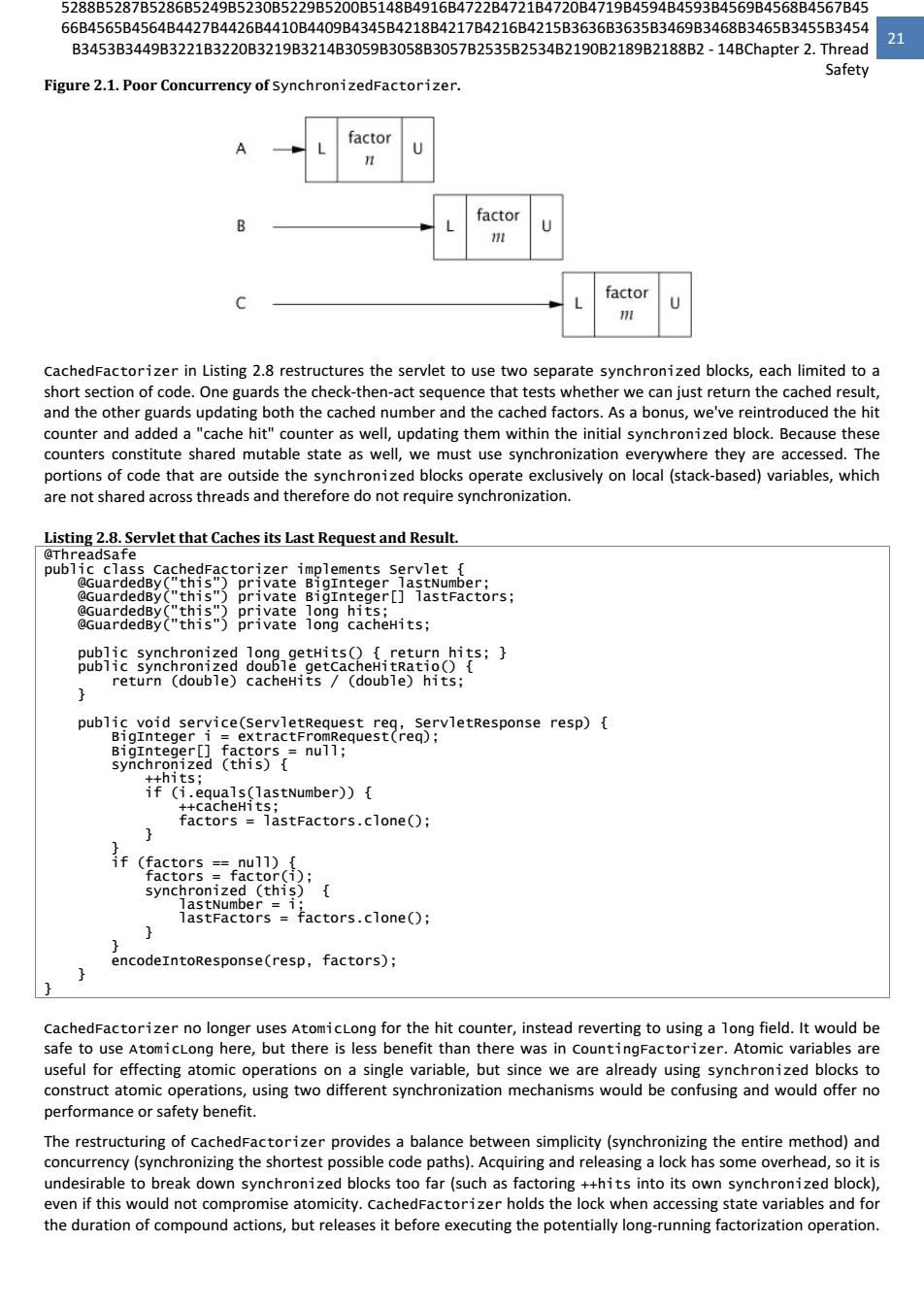

20 Java Concurrency In Practice When a variable is guarded by a lock Ͳmeaning that every access to that variable is performed with that lock held Ͳ you've ensured that only one thread at a time can access that variable. When a class has invariants that involve more than one state variable, there is an additional requirement: each variable participating in the invariant must be guarded by the same lock. This allows you to access or update them in a single atomic operation, preserving the invariant. SynchronizedFactorizer demonstrates this rule: both the cached number and the cached factors are guarded by the servlet object's intrinsic lock. For every invariant that involves more than one variable, all the variables involved in that invariant must be guarded by the same lock. If synchronization is the cure for race conditions, why not just declare every method synchronized? It turns out that such indiscriminate application of synchronized might be either too much or too little synchronization. Merely synchronizing every method, as Vector does, is not enough to render compound actions on a Vector atomic: if (!vector.contains(element)) vector.add(element); This attempt at a putͲifͲabsent operation has a race condition, even though both contains and add are atomic. While synchronized methods can make individual operations atomic, additional locking is requiredͲwhen multiple operations are combined into a compound action. (See Section 4.4 for some techniques for safely adding additional atomic operations to threadͲsafe objects.) At the same time, synchronizing every method can lead to liveness or performance problems, as we saw in SynchronizedFactorizer. 2.5. Liveness and Performance In UnsafeCachingFactorizer, we introduced some caching into our factoring servlet in the hope of improving performance. Caching required some shared state, which in turn required synchronization to maintain the integrity of that state. 
But the way we used synchronization in SynchronizedFactorizer makes it perform badly. The synchronization policy for SynchronizedFactorizer is to guard each state variable with the servlet object's intrinsic lock, and that policy was implemented by synchronizing the entirety of the service method. This simple, coarseͲ grained approach restored safety, but at a high price. Because service is synchronized, only one thread may execute it at once. This subverts the intended use of the servlet frameworkͲthat servlets be able to handle multiple requests simultaneouslyͲand can result in frustrated users if the load is high enough. If the servlet is busy factoring a large number, other clients have to wait until the current request is complete before the servlet can start on the new number. If the system has multiple CPUs, processors may remain idle even if the load is high. In any case, even shortͲrunning requests, such as those for which the value is cached, may take an unexpectedly long time because they must wait for previous longͲrunning requests to complete. Figure 2.1 shows what happens when multiple requests arrive for the synchronized factoring servlet: they queue up and are handled sequentially. We would describe this web application as exhibiting poor concurrency: the number of simultaneous invocations is limited not by the availability of processing resources, but by the structure of the application itself. Fortunately, it is easy to improve the concurrency of the servlet while maintaining thread safety by narrowing the scope of the synchronized block. You should be careful not to make the scope of the synchronized block too small; you would not want to divide an operation that should be atomic into more than one synchronized block. 
But it is reasonable to try to exclude from synchronized blocks long-running operations that do not affect shared state, so that other threads are not prevented from accessing the shared state while the long-running operation is in progress.
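The caution against splitting an atomic operation applies directly to the put-if-absent attempt on Vector shown earlier: the check and the add must happen under a single lock acquisition. The following is a minimal sketch of one way to do that, not a listing from this chapter. It assumes client-side locking, relying on the fact that Vector's own methods synchronize on the Vector instance; the class name VectorHelpers and the methods putIfAbsent and raceToAdd are hypothetical names introduced for illustration.

```java
import java.util.Vector;

// Hypothetical helper class for illustration; not one of the chapter's listings.
final class VectorHelpers {
    private VectorHelpers() {}

    // Assumption: Vector's methods lock on the Vector instance itself, so
    // holding that same lock makes the check and the add one compound action.
    static <E> boolean putIfAbsent(Vector<E> vector, E element) {
        synchronized (vector) {
            boolean absent = !vector.contains(element);
            if (absent) {
                vector.add(element);
            }
            return absent;
        }
    }

    // Starts `threads` threads that all try to add the same element, waits
    // for them, and returns how many copies ended up in the vector.
    static int raceToAdd(int threads) {
        Vector<String> vector = new Vector<>();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> putIfAbsent(vector, "x"));
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return vector.size();
    }

    public static void main(String[] args) {
        System.out.println("copies stored: " + raceToAdd(8));
    }
}
```

Because every thread must hold the vector's lock for the whole check-then-add sequence, only the first thread to acquire it finds the element absent, and the vector ends up with a single copy. Splitting the contains call and the add call into two separate synchronized blocks would reopen the race.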

Figure 2.1. Poor Concurrency of SynchronizedFactorizer.

CachedFactorizer in Listing 2.8 restructures the servlet to use two separate synchronized blocks, each limited to a short section of code. One guards the check-then-act sequence that tests whether we can just return the cached result, and the other guards updating both the cached number and the cached factors. As a bonus, we've reintroduced the hit counter and added a "cache hit" counter as well, updating them within the initial synchronized block. Because these counters constitute shared mutable state as well, we must use synchronization everywhere they are accessed. The portions of code that are outside the synchronized blocks operate exclusively on local (stack-based) variables, which are not shared across threads and therefore do not require synchronization.

Listing 2.8. Servlet that Caches its Last Request and Result.
    @ThreadSafe
    public class CachedFactorizer implements Servlet {
        @GuardedBy("this") private BigInteger lastNumber;
        @GuardedBy("this") private BigInteger[] lastFactors;
        @GuardedBy("this") private long hits;
        @GuardedBy("this") private long cacheHits;

        public synchronized long getHits() { return hits; }

        public synchronized double getCacheHitRatio() {
            return (double) cacheHits / (double) hits;
        }

        public void service(ServletRequest req, ServletResponse resp) {
            BigInteger i = extractFromRequest(req);
            BigInteger[] factors = null;
            synchronized (this) {
                ++hits;
                if (i.equals(lastNumber)) {
                    ++cacheHits;
                    factors = lastFactors.clone();
                }
            }
            if (factors == null) {
                factors = factor(i);
                synchronized (this) {
                    lastNumber = i;
                    lastFactors = factors.clone();
                }
            }
            encodeIntoResponse(resp, factors);
        }
    }

CachedFactorizer no longer uses AtomicLong for the hit counter, instead reverting to using a long field. It would be safe to use AtomicLong here, but there is less benefit than there was in CountingFactorizer. Atomic variables are useful for effecting atomic operations on a single variable, but since we are already using synchronized blocks to construct atomic operations, using two different synchronization mechanisms would be confusing and would offer no performance or safety benefit.

The restructuring of CachedFactorizer provides a balance between simplicity (synchronizing the entire method) and concurrency (synchronizing the shortest possible code paths). Acquiring and releasing a lock has some overhead, so it is undesirable to break down synchronized blocks too far (such as factoring ++hits into its own synchronized block), even if this would not compromise atomicity. CachedFactorizer holds the lock when accessing state variables and for the duration of compound actions, but releases it before executing the potentially long-running factorization operation.
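To try the locking structure of CachedFactorizer without a servlet container, the sketch below keeps the same two short synchronized blocks but takes the number as a plain argument and returns the factors. It is an illustration under stated assumptions rather than the book's code: the class name CachedFactorizerSketch, the getCacheHits accessor, the runClients driver, and the naive trial-division factor method are all hypothetical stand-ins for the servlet plumbing and the unspecified factoring routine.

```java
import java.math.BigInteger;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Servlet-free sketch of the CachedFactorizer locking structure (hypothetical class).
final class CachedFactorizerSketch {
    private BigInteger lastNumber;     // guarded by this
    private BigInteger[] lastFactors;  // guarded by this
    private long hits;                 // guarded by this
    private long cacheHits;            // guarded by this

    synchronized long getHits() { return hits; }
    synchronized long getCacheHits() { return cacheHits; }

    BigInteger[] service(BigInteger i) {
        BigInteger[] factors = null;
        synchronized (this) {          // short block: counters and cache lookup
            ++hits;
            if (i.equals(lastNumber)) {
                ++cacheHits;
                factors = lastFactors.clone();
            }
        }
        if (factors == null) {
            factors = factor(i);       // potentially slow; lock is not held here
            synchronized (this) {      // short block: update both cached fields together
                lastNumber = i;
                lastFactors = factors.clone();
            }
        }
        return factors;
    }

    // Assumed stand-in for the factoring routine: trial division, for n >= 2.
    // It touches only local variables, so it needs no synchronization.
    static BigInteger[] factor(BigInteger n) {
        List<BigInteger> out = new ArrayList<>();
        BigInteger d = BigInteger.valueOf(2);
        while (d.multiply(d).compareTo(n) <= 0) {
            while (n.mod(d).signum() == 0) {
                out.add(d);
                n = n.divide(d);
            }
            d = d.add(BigInteger.ONE);
        }
        if (n.compareTo(BigInteger.ONE) > 0) {
            out.add(n);
        }
        return out.toArray(new BigInteger[0]);
    }

    // Runs concurrent clients and returns how many responses had factors
    // whose product did not equal the requested number.
    static int runClients(CachedFactorizerSketch f, int threads, int callsPerThread) {
        AtomicInteger wrong = new AtomicInteger();
        Thread[] workers = new Thread[threads];
        for (int t = 0; t < threads; t++) {
            workers[t] = new Thread(() -> {
                for (int c = 0; c < callsPerThread; c++) {
                    BigInteger n = BigInteger.valueOf(2 + (c % 3)); // small set of inputs
                    BigInteger product = BigInteger.ONE;
                    for (BigInteger p : f.service(n)) {
                        product = product.multiply(p);
                    }
                    if (!product.equals(n)) {
                        wrong.incrementAndGet();
                    }
                }
            });
            workers[t].start();
        }
        for (Thread w : workers) {
            try {
                w.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return wrong.get();
    }
}
```

A caller might create one CachedFactorizerSketch, invoke runClients(f, 4, 500), and then compare getHits() with the total number of calls. Since every increment of hits happens inside a synchronized block, no updates should be lost, and since lastNumber and lastFactors are always read and written together under the same lock, a cache hit should never return factors for a different number.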