variety of examples of parallel programs Non-numerical Algorithms Chapters 9-12 present parallel non-numerical algorithms.Chapter 9 addresses sorting algorithms such as bitonic sort,bubble sort and its variants,quicksort,sample sort,and shellsort.Chapter 10 describes algorithms for various graph theory problems such as minimum spanning tree,shortest paths,and connected components.Algorithms for sparse graphs are also discussed.Chapter 11 addresses search-based methods such as branch-and-bound and heuristic search for combinatorial problems.Chapter 12 classifies and presents parallel formulations for a variety of dynamic programming algorithms. Numerical Algorithms Chapters 8 and 13 present parallel numerical algorithms.Chapter 8 covers basic operations on dense matrices such as matrix multiplication,matrix-vector multiplication,and Gaussian elimination.This chapter is included before non-numerical algorithms,as the techniques for partitioning and assigning matrices to processors are common to many non-numerical algorithms.Furthermore, matrix-vector and matrix-matrix multiplication algorithms form the kernels of many graph algorithms. Chapter 13 describes algorithms for computing Fast Fourier Transforms. Team LiB 4 PREVIOUS NEXT Team LiB 4 PREVIOUS NEXT 1.4 Bibliographic Remarks Many books discuss aspects of parallel processing at varying levels of detail.Hardware aspects of parallel computers have been discussed extensively in several textbooks and monographs [CSG98,LW95,HX98 AG94,Fly95,AG94,Sto93,DeC89,HB84,RF89,Sie85,Tab90,Tab91,WF84,Woo86].A number of texts discuss paradigms and languages for programming parallel computers [LB98,Pac98,GLS99,GSNL98, CDK+00,WA98,And91,BA82,Bab88,Ble90,Con89,CT92,Les93,Per87,Wal91].Akl [Akl97],Cole [Col89],Gibbons and Rytter [GR90],Foster [Fos95],Leighton [Lei92],Miller and Stout [MS96],and Quinn [Qui94]discuss various aspects of parallel algorithm design and analysis.Buyya(Editor)[Buy99]and Pfister [Pfi98]discuss various aspects of parallel computing using clusters.Jaja [Jaj92]covers parallel algorithms for the PRAM model of computation.Hillis [Hil85,HS86]and Hatcher and Quinn [HQ91]discuss data-parallel programming.Agha [Agh86]discusses a model of concurrent computation based on actors. Sharp [Sha85]addresses data-flow computing.Some books provide a general overview of topics in parallel computing [CL93,Fou94,Zom96,JGD87,LER92,Mol93,Qui94].Many books address parallel processing applications in numerical analysis and scientific computing [DDSV99,FJDS96,GO93,Car89]. Fox et al.[F]L88]and Angus et al.[AFKW90]provide an application-oriented view of algorithm design for problems in scientific computing.Bertsekas and Tsitsiklis [BT97]discuss parallel algorithms,with emphasis on numerical applications. Akl and Lyons [AL93]discuss parallel algorithms in computational geometry.Ranka and Sahni [RS90b] and Dew,Earnshaw,and Heywood [DEH89]address parallel algorithms for use in computer vision.Green [Gre91]covers parallel algorithms for graphics applications.Many books address the use of parallel processing in artificial intelligence applications [Gup87,HD89b,KGK90,KKKS94,Kow88,RZ89]. A useful collection of reviews,bibliographies and indexes has been put together by the Association for Computing Machinery [ACM91].Messina and Murli [MM91]present a collection of papers on various aspects of the application and potential of parallel computing.The scope of parallel processing and various aspects of US government support have also been discussed in National Science Foundation reports [NSF91,GOV99] A number of conferences address various aspects of parallel computing.A few important ones are the Supercomputing Conference,ACM Symposium on Parallel Algorithms and Architectures,the International Conference on Parallel Processing,the International Parallel and Distributed Processing Symposium, Parallel Computing,and the SIAM Conference on Parallel Processing.Important journals in parallel processing include IEEE Transactions on Parallel and Distributed Systems,International Journal of Parallel Programming,Journal of Parallel and Distributed Computing,Parallel Computing,IEEE Concurrency,and Parallel Processing Letters.These proceedings and journals provide a rich source of information on the state of the art in parallel processing. Team LiB 4 PREVIOUS NEXT Team LiB 4 PREVIOUS NEXT Problems 1.1 Go to the Top 500 Supercomputers site(http://www.top500.org/)and list the five most

variety of examples of parallel programs. Non-numerical Algorithms Chapters 9–12 present parallel non-numerical algorithms. Chapter 9 addresses sorting algorithms such as bitonic sort, bubble sort and its variants, quicksort, sample sort, and shellsort. Chapter 10 describes algorithms for various graph theory problems such as minimum spanning tree, shortest paths, and connected components. Algorithms for sparse graphs are also discussed. Chapter 11 addresses search-based methods such as branch-and-bound and heuristic search for combinatorial problems. Chapter 12 classifies and presents parallel formulations for a variety of dynamic programming algorithms. Numerical Algorithms Chapters 8 and 13 present parallel numerical algorithms. Chapter 8 covers basic operations on dense matrices such as matrix multiplication, matrix-vector multiplication, and Gaussian elimination. This chapter is included before non-numerical algorithms, as the techniques for partitioning and assigning matrices to processors are common to many non-numerical algorithms. Furthermore, matrix-vector and matrix-matrix multiplication algorithms form the kernels of many graph algorithms. Chapter 13 describes algorithms for computing Fast Fourier Transforms. [ Team LiB ] [ Team LiB ] 1.4 Bibliographic Remarks Many books discuss aspects of parallel processing at varying levels of detail. Hardware aspects of parallel computers have been discussed extensively in several textbooks and monographs [CSG98, LW95, HX98, AG94, Fly95, AG94, Sto93, DeC89, HB84, RF89, Sie85, Tab90, Tab91, WF84, Woo86]. A number of texts discuss paradigms and languages for programming parallel computers [LB98, Pac98, GLS99, GSNL98, CDK+00, WA98, And91, BA82, Bab88, Ble90, Con89, CT92, Les93, Per87, Wal91]. Akl [Akl97], Cole [Col89], Gibbons and Rytter [GR90], Foster [Fos95], Leighton [Lei92], Miller and Stout [MS96], and Quinn [Qui94] discuss various aspects of parallel algorithm design and analysis. Buyya (Editor) [Buy99] and Pfister [Pfi98] discuss various aspects of parallel computing using clusters. Jaja [Jaj92] covers parallel algorithms for the PRAM model of computation. Hillis [Hil85, HS86] and Hatcher and Quinn [HQ91] discuss data-parallel programming. Agha [Agh86] discusses a model of concurrent computation based on actors. Sharp [Sha85] addresses data-flow computing. Some books provide a general overview of topics in parallel computing [CL93, Fou94, Zom96, JGD87, LER92, Mol93, Qui94]. Many books address parallel processing applications in numerical analysis and scientific computing [DDSV99, FJDS96, GO93, Car89]. Fox et al. [FJL+88] and Angus et al. [AFKW90] provide an application-oriented view of algorithm design for problems in scientific computing. Bertsekas and Tsitsiklis [BT97] discuss parallel algorithms, with emphasis on numerical applications. Akl and Lyons [AL93] discuss parallel algorithms in computational geometry. Ranka and Sahni [RS90b] and Dew, Earnshaw, and Heywood [DEH89] address parallel algorithms for use in computer vision. Green [Gre91] covers parallel algorithms for graphics applications. Many books address the use of parallel processing in artificial intelligence applications [Gup87, HD89b, KGK90, KKKS94, Kow88, RZ89]. A useful collection of reviews, bibliographies and indexes has been put together by the Association for Computing Machinery [ACM91]. Messina and Murli [MM91] present a collection of papers on various aspects of the application and potential of parallel computing. The scope of parallel processing and various aspects of US government support have also been discussed in National Science Foundation reports [NSF91, GOV99]. A number of conferences address various aspects of parallel computing. A few important ones are the Supercomputing Conference, ACM Symposium on Parallel Algorithms and Architectures, the International Conference on Parallel Processing, the International Parallel and Distributed Processing Symposium, Parallel Computing, and the SIAM Conference on Parallel Processing. Important journals in parallel processing include IEEE Transactions on Parallel and Distributed Systems, International Journal of Parallel Programming, Journal of Parallel and Distributed Computing, Parallel Computing, IEEE Concurrency, and Parallel Processing Letters. These proceedings and journals provide a rich source of information on the state of the art in parallel processing. [ Team LiB ] [ Team LiB ] Problems 1.1 Go to the Top 500 Supercomputers site (http://www.top500.org/) and list the five most Page 6 of 7 file://C:\Documents and Settings\CIC000\Local Settings\Temp\~hhA83F.htm 2/8/2013

powerful supercomputers along with their FLOPS rating. 1.2 List three major problems requiring the use of supercomputing in the following domains: 1.Structural Mechanics. 2.Computational Biology. 3.Commercial Applications. 1.3 Collect statistics on the number of components in state of the art integrated circuits over the years.Plot the number of components as a function of time and compare the growth rate to that dictated by Moore's law. 1.4 Repeat the above experiment for the peak FLOPS rate of processors and compare the speed to that inferred from Moore's law. Team LiB 1 PREVIOUS NEXT

powerful supercomputers along with their FLOPS rating. 1.2 List three major problems requiring the use of supercomputing in the following domains: 1. Structural Mechanics. 2. Computational Biology. 3. Commercial Applications. 1.3 Collect statistics on the number of components in state of the art integrated circuits over the years. Plot the number of components as a function of time and compare the growth rate to that dictated by Moore's law. 1.4 Repeat the above experiment for the peak FLOPS rate of processors and compare the speed to that inferred from Moore's law. [ Team LiB ] Page 7 of 7 file://C:\Documents and Settings\CIC000\Local Settings\Temp\~hhA83F.htm 2/8/2013

Team LiB 1 4 PREVIOUS NEXT Chapter 2.Parallel Programming Platforms The traditional logical view of a sequential computer consists of a memory connected to a processor via a datapath.All three components-processor,memory,and datapath-present bottlenecks to the overall processing rate of a computer system.A number of architectural innovations over the years have addressed these bottlenecks.One of the most important innovations is multiplicity -in processing units, datapaths,and memory units.This multiplicity is either entirely hidden from the programmer,as in the case of implicit parallelism,or exposed to the programmer in different forms.In this chapter,we present an overview of important architectural concepts as they relate to parallel processing.The objective is to provide sufficient detail for programmers to be able to write efficient code on a variety of platforms.We develop cost models and abstractions for quantifying the performance of various parallel algorithms,and identify bottlenecks resulting from various programming constructs. We start our discussion of parallel platforms with an overview of serial and implicitly parallel architectures. This is necessitated by the fact that it is often possible to re-engineer codes to achieve significant speedups(2 x to 5 x unoptimized speed)using simple program transformations.Parallelizing sub-optimal serial codes often has undesirable effects of unreliable speedups and misleading runtimes.For this reason, we advocate optimizing serial performance of codes before attempting parallelization.As we shall demonstrate through this chapter,the tasks of serial and parallel optimization often have very similar characteristics.After discussing serial and implicitly parallel architectures,we devote the rest of this chapter to organization of parallel platforms,underlying cost models for algorithms,and platform abstractions for portable algorithm design.Readers wishing to delve directly into parallel architectures may choose to skip Sections 2.1 and 2.2. Team LiB 4 PREVIOUS NEXT Team LiB 4 PREVIOUS NEXT 2.1 Implicit Parallelism:Trends in Microprocessor Architectures* While microprocessor technology has delivered significant improvements in clock speeds over the past decade,it has also exposed a variety of other performance bottlenecks.To alleviate these bottlenecks, microprocessor designers have explored alternate routes to cost-effective performance gains.In this section,we will outline some of these trends with a view to understanding their limitations and how they impact algorithm and code development.The objective here is not to provide a comprehensive description of processor architectures.There are several excellent texts referenced in the bibliography that address this topic. Clock speeds of microprocessors have posted impressive gains-two to three orders of magnitude over the past 20 years.However,these increments in clock speed are severely diluted by the limitations of memory technology.At the same time,higher levels of device integration have also resulted in a very large transistor count,raising the obvious issue of how best to utilize them.Consequently,techniques that enable execution of multiple instructions in a single clock cycle have become popular.Indeed,this trend is evident in the current generation of microprocessors such as the Itanium,Sparc Ultra,MIPS,and Power4. In this section,we briefly explore mechanisms used by various processors for supporting multiple instruction execution. 2.1.1 Pipelining and Superscalar Execution Processors have long relied on pipelines for improving execution rates.By overlapping various stages in instruction execution(fetch,schedule,decode,operand fetch,execute,store,among others),pipelining enables faster execution.The assembly-line analogy works well for understanding pipelines.If the assembly of a car,taking 100 time units,can be broken into 10 pipelined stages of 10 units each,a single assembly line can produce a car every 10 time units!This represents a 10-fold speedup over producing cars entirely serially,one after the other.It is also evident from this example that to increase the speed of a single pipeline,one would break down the tasks into smaller and smaller units,thus lengthening the pipeline and increasing overlap in execution.In the context of processors,this enables faster clock rates since the tasks are now smaller.For example,the Pentium 4,which operates at 2.0 GHz,has a 20 stage

[ Team LiB ] Chapter 2. Parallel Programming Platforms The traditional logical view of a sequential computer consists of a memory connected to a processor via a datapath. All three components – processor, memory, and datapath – present bottlenecks to the overall processing rate of a computer system. A number of architectural innovations over the years have addressed these bottlenecks. One of the most important innovations is multiplicity – in processing units, datapaths, and memory units. This multiplicity is either entirely hidden from the programmer, as in the case of implicit parallelism, or exposed to the programmer in different forms. In this chapter, we present an overview of important architectural concepts as they relate to parallel processing. The objective is to provide sufficient detail for programmers to be able to write efficient code on a variety of platforms. We develop cost models and abstractions for quantifying the performance of various parallel algorithms, and identify bottlenecks resulting from various programming constructs. We start our discussion of parallel platforms with an overview of serial and implicitly parallel architectures. This is necessitated by the fact that it is often possible to re-engineer codes to achieve significant speedups (2 x to 5 x unoptimized speed) using simple program transformations. Parallelizing sub-optimal serial codes often has undesirable effects of unreliable speedups and misleading runtimes. For this reason, we advocate optimizing serial performance of codes before attempting parallelization. As we shall demonstrate through this chapter, the tasks of serial and parallel optimization often have very similar characteristics. After discussing serial and implicitly parallel architectures, we devote the rest of this chapter to organization of parallel platforms, underlying cost models for algorithms, and platform abstractions for portable algorithm design. Readers wishing to delve directly into parallel architectures may choose to skip Sections 2.1 and 2.2. [ Team LiB ] [ Team LiB ] 2.1 Implicit Parallelism: Trends in Microprocessor Architectures* While microprocessor technology has delivered significant improvements in clock speeds over the past decade, it has also exposed a variety of other performance bottlenecks. To alleviate these bottlenecks, microprocessor designers have explored alternate routes to cost-effective performance gains. In this section, we will outline some of these trends with a view to understanding their limitations and how they impact algorithm and code development. The objective here is not to provide a comprehensive description of processor architectures. There are several excellent texts referenced in the bibliography that address this topic. Clock speeds of microprocessors have posted impressive gains - two to three orders of magnitude over the past 20 years. However, these increments in clock speed are severely diluted by the limitations of memory technology. At the same time, higher levels of device integration have also resulted in a very large transistor count, raising the obvious issue of how best to utilize them. Consequently, techniques that enable execution of multiple instructions in a single clock cycle have become popular. Indeed, this trend is evident in the current generation of microprocessors such as the Itanium, Sparc Ultra, MIPS, and Power4. In this section, we briefly explore mechanisms used by various processors for supporting multiple instruction execution. 2.1.1 Pipelining and Superscalar Execution Processors have long relied on pipelines for improving execution rates. By overlapping various stages in instruction execution (fetch, schedule, decode, operand fetch, execute, store, among others), pipelining enables faster execution. The assembly-line analogy works well for understanding pipelines. If the assembly of a car, taking 100 time units, can be broken into 10 pipelined stages of 10 units each, a single assembly line can produce a car every 10 time units! This represents a 10-fold speedup over producing cars entirely serially, one after the other. It is also evident from this example that to increase the speed of a single pipeline, one would break down the tasks into smaller and smaller units, thus lengthening the pipeline and increasing overlap in execution. In the context of processors, this enables faster clock rates since the tasks are now smaller. For example, the Pentium 4, which operates at 2.0 GHz, has a 20 stage Page 1 of 56 file://C:\Documents and Settings\CIC000\Local Settings\Temp\~hh1AB0.htm 2/8/2013

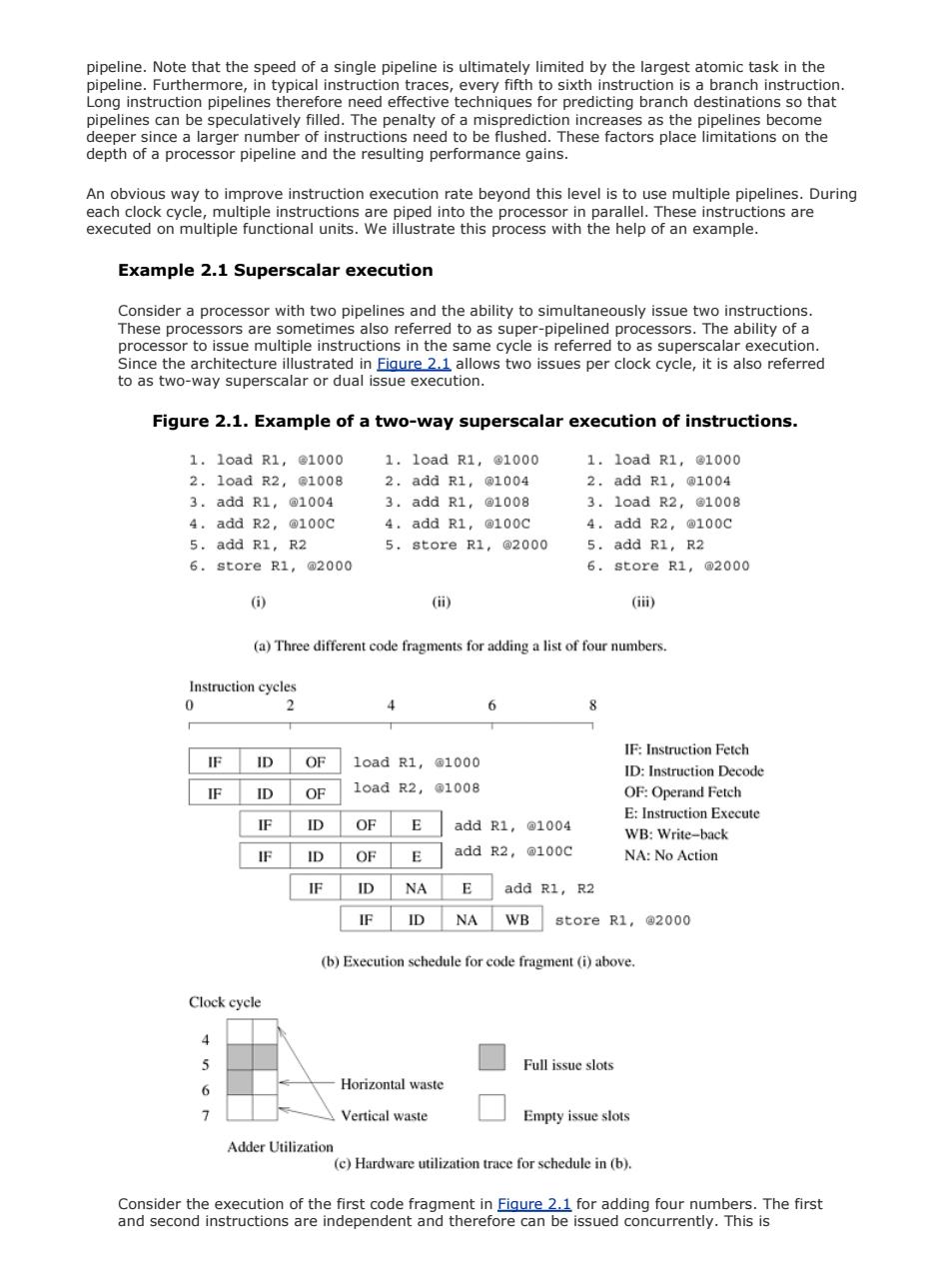

pipeline.Note that the speed of a single pipeline is ultimately limited by the largest atomic task in the pipeline.Furthermore,in typical instruction traces,every fifth to sixth instruction is a branch instruction Long instruction pipelines therefore need effective techniques for predicting branch destinations so that pipelines can be speculatively filled.The penalty of a misprediction increases as the pipelines become deeper since a larger number of instructions need to be flushed.These factors place limitations on the depth of a processor pipeline and the resulting performance gains. An obvious way to improve instruction execution rate beyond this level is to use multiple pipelines.During each clock cycle,multiple instructions are piped into the processor in parallel.These instructions are executed on multiple functional units.We illustrate this process with the help of an example. Example 2.1 Superscalar execution Consider a processor with two pipelines and the ability to simultaneously issue two instructions. These processors are sometimes also referred to as super-pipelined processors.The ability of a processor to issue multiple instructions in the same cycle is referred to as superscalar execution. Since the architecture illustrated in Figure_2.1 allows two issues per clock cycle,it is also referred to as two-way superscalar or dual issue execution. Figure 2.1.Example of a two-way superscalar execution of instructions. 1.1oadR1,@1000 1.1oadR1,@1000 1.1oadR1,©1000 2,1oadR2,1008 2,addR1,@1004 2.,addR1,@1004 3.addR1,@1004 3.addR1,@1008 3.1oadR2,@1008 4.addR2,@100c 4.addR1,@100c 4.addR2,©100c 5.add R1,R2 5,8 tore R1,@2000 5.add Rl,R2 6.store R1,@2000 6.store R1,@2000 ① (i) GiD (a)Three different code fragments for adding a list of four numbers Instruction cycles 0 6 8 入 IF:Instruction Fetch IF ID OF 1oadR1,@1000 ID:Instruction Decode IF D OF 1oadR2,@1008 OF:Operand Fetch E:Instruction Execute F D OF E addR1,@1004 WB:Write-back F ID OF E add R2,@100C NA:No Action F D NA E add R1,R2 F ID NA WB store R1,@2000 (b)Execution schedule for code fragment (i)above. Clock cyele 5 Full issue slots 6 Horizontal waste Vertical waste Empty issue slots Adder Utilization (c)Hardware utilization trace for schedule in (b). Consider the execution of the first code fragment in Figure 2.1 for adding four numbers.The first and second instructions are independent and therefore can be issued concurrently.This is

pipeline. Note that the speed of a single pipeline is ultimately limited by the largest atomic task in the pipeline. Furthermore, in typical instruction traces, every fifth to sixth instruction is a branch instruction. Long instruction pipelines therefore need effective techniques for predicting branch destinations so that pipelines can be speculatively filled. The penalty of a misprediction increases as the pipelines become deeper since a larger number of instructions need to be flushed. These factors place limitations on the depth of a processor pipeline and the resulting performance gains. An obvious way to improve instruction execution rate beyond this level is to use multiple pipelines. During each clock cycle, multiple instructions are piped into the processor in parallel. These instructions are executed on multiple functional units. We illustrate this process with the help of an example. Example 2.1 Superscalar execution Consider a processor with two pipelines and the ability to simultaneously issue two instructions. These processors are sometimes also referred to as super-pipelined processors. The ability of a processor to issue multiple instructions in the same cycle is referred to as superscalar execution. Since the architecture illustrated in Figure 2.1 allows two issues per clock cycle, it is also referred to as two-way superscalar or dual issue execution. Figure 2.1. Example of a two-way superscalar execution of instructions. Consider the execution of the first code fragment in Figure 2.1 for adding four numbers. The first and second instructions are independent and therefore can be issued concurrently. This is Page 2 of 56 file://C:\Documents and Settings\CIC000\Local Settings\Temp\~hh1AB0.htm 2/8/2013

illustrated in the simultaneous issue of the instructions load R1,e1000 and load R2,e1008 at t 0.The instructions are fetched,decoded,and the operands are fetched.The next two instructions,add R1,e1004 and add R2,e100c are also mutually independent,although they must be executed after the first two instructions.Consequently,they can be issued concurrently at t 1 since the processors are pipelined.These instructions terminate at t 5.The next two instructions,add R1,R2 and store R1,02000 cannot be executed concurrently since the result of the former(contents of register R1)is used by the latter.Therefore,only the add instruction is issued at t 2 and the store instruction at t 3.Note that the instruction add R1,R2 can be executed only after the previous two instructions have been executed.The instruction schedule is illustrated in Fiqure 2.1(b).The schedule assumes that each memory access takes a single cycle. In reality,this may not be the case.The implications of this assumption are discussed in Section 2.2 on memory system performance. In principle,superscalar execution seems natural,even simple.However,a number of issues need to be resolved.First,as illustrated in Example 2.1,instructions in a program may be related to each other.The results of an instruction may be required for subsequent instructions.This is referred to as true data dependency.For instance,consider the second code fragment in Figure 2.1 for adding four numbers There is a true data dependency between load R1,e1000 and add R1,e1004,and similarly between subsequent instructions.Dependencies of this type must be resolved before simultaneous issue of instructions.This has two implications.First,since the resolution is done at runtime,it must be supported in hardware.The complexity of this hardware can be high.Second,the amount of instruction level parallelism in a program is often limited and is a function of coding technique.In the second code fragment,there can be no simultaneous issue,leading to poor resource utilization.The three code fragments in Figure 2.1(a)also illustrate that in many cases it is possible to extract more parallelism by reordering the instructions and by altering the code.Notice that in this example the code reorganization corresponds to exposing parallelism in a form that can be used by the instruction issue mechanism. Another source of dependency between instructions results from the finite resources shared by various pipelines.As an example,consider the co-scheduling of two floating point operations on a dual issue machine with a single floating point unit.Although there might be no data dependencies between the instructions,they cannot be scheduled together since both need the floating point unit.This form of dependency in which two instructions compete for a single processor resource is referred to as resource dependency. The flow of control through a program enforces a third form of dependency between instructions.Consider the execution of a conditional branch instruction.Since the branch destination is known only at the point of execution,scheduling instructions a priori across branches may lead to errors.These dependencies are referred to as branch dependencies or procedural dependencies and are typically handled by speculatively scheduling across branches and rolling back in case of errors.Studies of typical traces have shown that on average,a branch instruction is encountered between every five to six instructions. Therefore,just as in populating instruction pipelines,accurate branch prediction is critical for efficient superscalar execution. The ability of a processor to detect and schedule concurrent instructions is critical to superscalar performance.For instance,consider the third code fragment in Fiqure 2.1 which also computes the sum of four numbers.The reader will note that this is merely a semantically equivalent reordering of the first code fragment.However,in this case,there is a data dependency between the first two instructions-load R1, e1000 and add R1,@1004.Therefore,these instructions cannot be issued simultaneously.However,if the processor had the ability to look ahead,it would realize that it is possible to schedule the third instruction -load R2,@1008-with the first instruction.In the next issue cycle,instructions two and four can be scheduled,and so on.In this way,the same execution schedule can be derived for the first and third code fragments.However,the processor needs the ability to issue instructions out-of-order to accomplish desired reordering.The parallelism available in in-order issue of instructions can be highly limited as illustrated by this example.Most current microprocessors are capable of out-of-order issue and completion.This model,also referred to as dynamic instruction issue,exploits maximum instruction level parallelism.The processor uses a window of instructions from which it selects instructions for simultaneous issue.This window corresponds to the look-ahead of the scheduler. The performance of superscalar architectures is limited by the available instruction level parallelism. Consider the example in Fiqure 2.1.For simplicity of discussion,let us ignore the pipelining aspects of the example and focus on the execution aspects of the program.Assuming two execution units(multiply-add units),the figure illustrates that there are several zero-issue cycles(cycles in which the floating point unit is idle).These are essentially wasted cycles from the point of view of the execution unit.If,during a

illustrated in the simultaneous issue of the instructions load R1, @1000 and load R2, @1008 at t = 0. The instructions are fetched, decoded, and the operands are fetched. The next two instructions, add R1, @1004 and add R2, @100C are also mutually independent, although they must be executed after the first two instructions. Consequently, they can be issued concurrently at t = 1 since the processors are pipelined. These instructions terminate at t = 5. The next two instructions, add R1, R2 and store R1, @2000 cannot be executed concurrently since the result of the former (contents of register R1) is used by the latter. Therefore, only the add instruction is issued at t = 2 and the store instruction at t = 3. Note that the instruction add R1, R2 can be executed only after the previous two instructions have been executed. The instruction schedule is illustrated in Figure 2.1(b). The schedule assumes that each memory access takes a single cycle. In reality, this may not be the case. The implications of this assumption are discussed in Section 2.2 on memory system performance. In principle, superscalar execution seems natural, even simple. However, a number of issues need to be resolved. First, as illustrated in Example 2.1, instructions in a program may be related to each other. The results of an instruction may be required for subsequent instructions. This is referred to as true data dependency. For instance, consider the second code fragment in Figure 2.1 for adding four numbers. There is a true data dependency between load R1, @1000 and add R1, @1004, and similarly between subsequent instructions. Dependencies of this type must be resolved before simultaneous issue of instructions. This has two implications. First, since the resolution is done at runtime, it must be supported in hardware. The complexity of this hardware can be high. Second, the amount of instruction level parallelism in a program is often limited and is a function of coding technique. In the second code fragment, there can be no simultaneous issue, leading to poor resource utilization. The three code fragments in Figure 2.1(a) also illustrate that in many cases it is possible to extract more parallelism by reordering the instructions and by altering the code. Notice that in this example the code reorganization corresponds to exposing parallelism in a form that can be used by the instruction issue mechanism. Another source of dependency between instructions results from the finite resources shared by various pipelines. As an example, consider the co-scheduling of two floating point operations on a dual issue machine with a single floating point unit. Although there might be no data dependencies between the instructions, they cannot be scheduled together since both need the floating point unit. This form of dependency in which two instructions compete for a single processor resource is referred to as resource dependency. The flow of control through a program enforces a third form of dependency between instructions. Consider the execution of a conditional branch instruction. Since the branch destination is known only at the point of execution, scheduling instructions a priori across branches may lead to errors. These dependencies are referred to as branch dependencies or procedural dependencies and are typically handled by speculatively scheduling across branches and rolling back in case of errors. Studies of typical traces have shown that on average, a branch instruction is encountered between every five to six instructions. Therefore, just as in populating instruction pipelines, accurate branch prediction is critical for efficient superscalar execution. The ability of a processor to detect and schedule concurrent instructions is critical to superscalar performance. For instance, consider the third code fragment in Figure 2.1 which also computes the sum of four numbers. The reader will note that this is merely a semantically equivalent reordering of the first code fragment. However, in this case, there is a data dependency between the first two instructions – load R1, @1000 and add R1, @1004. Therefore, these instructions cannot be issued simultaneously. However, if the processor had the ability to look ahead, it would realize that it is possible to schedule the third instruction – load R2, @1008 – with the first instruction. In the next issue cycle, instructions two and four can be scheduled, and so on. In this way, the same execution schedule can be derived for the first and third code fragments. However, the processor needs the ability to issue instructions out-of-order to accomplish desired reordering. The parallelism available in in-order issue of instructions can be highly limited as illustrated by this example. Most current microprocessors are capable of out-of-order issue and completion. This model, also referred to as dynamic instruction issue, exploits maximum instruction level parallelism. The processor uses a window of instructions from which it selects instructions for simultaneous issue. This window corresponds to the look-ahead of the scheduler. The performance of superscalar architectures is limited by the available instruction level parallelism. Consider the example in Figure 2.1. For simplicity of discussion, let us ignore the pipelining aspects of the example and focus on the execution aspects of the program. Assuming two execution units (multiply-add units), the figure illustrates that there are several zero-issue cycles (cycles in which the floating point unit is idle). These are essentially wasted cycles from the point of view of the execution unit. If, during a Page 3 of 56 file://C:\Documents and Settings\CIC000\Local Settings\Temp\~hh1AB0.htm 2/8/2013