Long Repeated Strings (Illustrating Section 15.2 Programming of Programming Pearls) Section 15.2 describes long repeated strings in text and gives an example.Here are some more examples,generated by this Second Edition program from several sources. King James Bible Jon Bentley Verse Numbers Included the house of his precious things,the silver,and the gold,and the spices,and the precious ointment,and all the house of his armour,and all that was found in his treasures:there was nothing in his house,nor in all his dominion, that Hezekiah shewed them not. This text is found in 2 Kings 20:13 and in Isaiah 39:2.Each text line in the original file begins with the chapter and verse (i.e.,GEN 1:1 In the beginning God created...").Long repeated strings therefore could not cross verse boundaries;the next experiment deleted those identifiers. Verse Numbers Excluded offered:His offering was one silver charger,the weight whereof was an hundred and thirty shekels,one silver bowl of seventy shekels,after the shekel of the sanctuary;both of them full of fine flour mingled with oil for a meat offering:One golden spoon of ten shekels,full of incense:One young bullock,one ram,one lamb of the first year,for a burnt offering:One kid of the goats for a sin offering:And for a sacrifice of peace offerings,two oxen, five rams,five he goats,five lambs of the first year:this was the offering of Ahi Numbers 7 describes offerings made over a period of twelve days;much of this string appears twelve times. Longest String That Occurs Twelve Times full of incense:One young bullock,one ram,one lamb of the first year,for a burnt offering:One kid of the goats for a sin offering:And for a sacrifice of peace offerings,two oxen,five rams,five he goats,five lambs of the first year:this was the offering of This string occurs twelve times in Numbers 7.This string was computed using the method of Solution 15.8. Programming Pearls

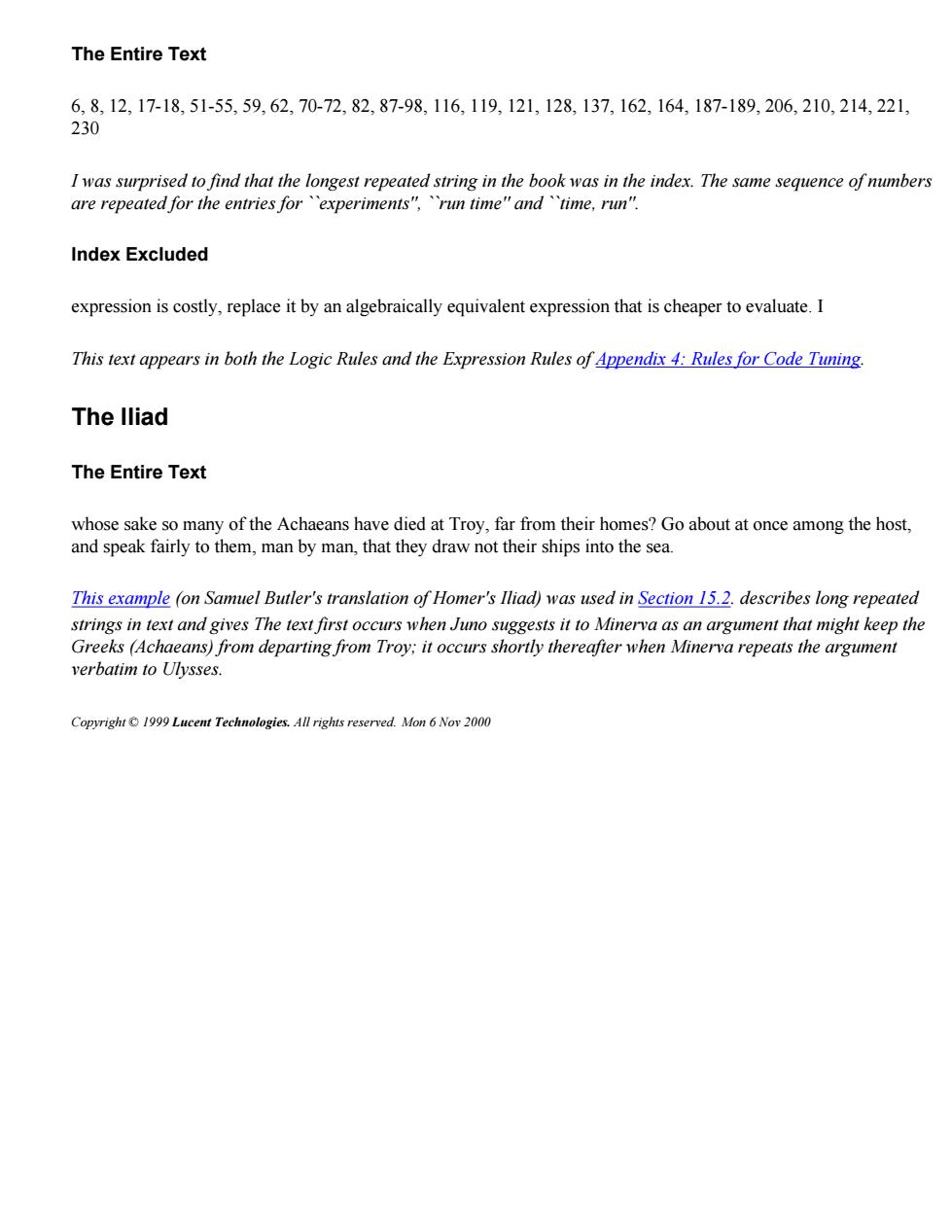

Long Repeated Strings (Illustrating Section 15.2 of Programming Pearls) Section 15.2 describes long repeated strings in text and gives an example. Here are some more examples, generated by this program from several sources. King James Bible Verse Numbers Included the house of his precious things, the silver, and the gold, and the spices, and the precious ointment, and all the house of his armour, and all that was found in his treasures: there was nothing in his house, nor in all his dominion, that Hezekiah shewed them not. This text is found in 2 Kings 20:13 and in Isaiah 39:2. Each text line in the original file begins with the chapter and verse (i.e., ``GEN 1:1 In the beginning God created ...''). Long repeated strings therefore could not cross verse boundaries; the next experiment deleted those identifiers. Verse Numbers Excluded , offered: His offering was one silver charger, the weight whereof was an hundred and thirty shekels, one silver bowl of seventy shekels, after the shekel of the sanctuary; both of them full of fine flour mingled with oil for a meat offering: One golden spoon of ten shekels, full of incense: One young bullock, one ram, one lamb of the first year, for a burnt offering: One kid of the goats for a sin offering: And for a sacrifice of peace offerings, two oxen, five rams, five he goats, five lambs of the first year: this was the offering of Ahi Numbers 7 describes offerings made over a period of twelve days; much of this string appears twelve times. Longest String That Occurs Twelve Times , full of incense: One young bullock, one ram, one lamb of the first year, for a burnt offering: One kid of the goats for a sin offering: And for a sacrifice of peace offerings, two oxen, five rams, five he goats, five lambs of the first year: this was the offering of This string occurs twelve times in Numbers 7. This string was computed using the method of Solution 15.8. Programming Pearls

The Entire Text 6,8,12,17-18,51-55,59,62,70-72,82,87-98,116,119,121,128,137,162,164,187-189,206,210,214,221, 230 I was surprised to find that the longest repeated string in the book was in the index.The same sequence of numbers are repeated for the entries for experiments",run time"andtime,run". Index Excluded expression is costly,replace it by an algebraically equivalent expression that is cheaper to evaluate.I This text appears in both the Logic Rules and the Expression Rules of Appendix 4:Rules for Code Tuning. The lliad The Entire Text whose sake so many of the Achaeans have died at Troy,far from their homes?Go about at once among the host, and speak fairly to them,man by man,that they draw not their ships into the sea. This example (on Samuel Butler's translation of Homer's Iliad)was used in Section 15.2.describes long repeated strings in text and gives The text first occurs when Juno suggests it to Minerva as an argument that might keep the Greeks (Achaeans)from departing from Troy;it occurs shortly thereafter when Minerva repeats the argument verbatim to Ul乃vsses.. Copyright1999 Lucent Technologies.All rights reserved.Mon 6 Noy 2000

The Entire Text 6, 8, 12, 17-18, 51-55, 59, 62, 70-72, 82, 87-98, 116, 119, 121, 128, 137, 162, 164, 187-189, 206, 210, 214, 221, 230 I was surprised to find that the longest repeated string in the book was in the index. The same sequence of numbers are repeated for the entries for ``experiments'', ``run time'' and ``time, run''. Index Excluded expression is costly, replace it by an algebraically equivalent expression that is cheaper to evaluate. I This text appears in both the Logic Rules and the Expression Rules of Appendix 4: Rules for Code Tuning. The Iliad The Entire Text whose sake so many of the Achaeans have died at Troy, far from their homes? Go about at once among the host, and speak fairly to them, man by man, that they draw not their ships into the sea. This example (on Samuel Butler's translation of Homer's Iliad) was used in Section 15.2. describes long repeated strings in text and gives The text first occurs when Juno suggests it to Minerva as an argument that might keep the Greeks (Achaeans) from departing from Troy; it occurs shortly thereafter when Minerva repeats the argument verbatim to Ulysses. Copyright © 1999 Lucent Technologies. All rights reserved. Mon 6 Nov 2000

/Copyright (C)1999 Lucent Technologies * /From 'Programming Pearls'by Jon Bentley * /longdup.c--Print longest string duplicated M times * #include <stdlib.h> #include <string.h> #include <stdio.h> int pstrcmp(char **p,char **q) return strcmp(*p,*q);) int comlen(char *p,char *q) int i=0; while(*p&&(*p++=*g++)) i++; return i; #define M 1 #define MAXN 5000000 char c[MAXN],*a [MAXN]; int main() int i,ch,n 0,maxi,maxlen =-1; while ((ch getchar())!=EOF){ a[n]=&c[n]: c[n++]=ch: } c[n]=0: qsort(a,n,sizeof(char *)pstrcmp); for (i=0;i n-M;i++) if (comlen(a[i],a[i+M])>maxlen){ maxlen comlen(a[i],a [i+M]); maxi i; printf (".*s\n",maxlen,a [maxi]); return 0;

/* Copyright (C) 1999 Lucent Technologies */ /* From 'Programming Pearls' by Jon Bentley */ /* longdup.c -- Print longest string duplicated M times */ #include <stdlib.h> #include <string.h> #include <stdio.h> int pstrcmp(char **p, char **q) { return strcmp(*p, *q); } int comlen(char *p, char *q) { int i = 0; while (*p && (*p++ == *q++)) i++; return i; } #define M 1 #define MAXN 5000000 char c[MAXN], *a[MAXN]; int main() { int i, ch, n = 0, maxi, maxlen = -1; while ((ch = getchar()) != EOF) { a[n] = &c[n]; c[n++] = ch; } c[n] = 0; qsort(a, n, sizeof(char *), pstrcmp); for (i = 0; i < n-M; i++) if (comlen(a[i], a[i+M]) > maxlen) { maxlen = comlen(a[i], a[i+M]); maxi = i; } printf("%.*s\n", maxlen, a[maxi]); return 0; }

Generating Text (Section 15.3 of Programming Programming Pearls) How can you generate random text?A classic approach is to let loose that poor monkey on his aging typewriter.If the beast is equally likely to hit any lower case letter or the space bar,the output might look like this: Second Edition uzlpcbizdmddk njsdzyyvfgxbgijgbtsak rqvpgnsbyputvqqdtmgltz ynqotqigexjumqphujcfwn ll jiexpyqzgsdllgcoluphl sefsrvqqytjakmav bfusvirsjl wprwqt Jon Bentley This is pretty unconvincing English text. If you count the letters in word games(like Scrabble or Boggle),you will notice that there are different numbers of the various letters.There are many more A's,for instance,than there are Z's.A monkey could produce more convincing text by counting the letters in a document--if A occurs 300 times in the text while B occurs just 100 times,then the monkey should be 3 times more likely to type an A than a B.This takes us a small step closer to English: saade ve mw hc n entt da k eethetocusosselalwo gx fgrsnoh,tvettaf aetnlbilo fc lhd okleutsndyeoshtbogo eet ib nheaoopefni ngent Most events occur in context.Suppose that we wanted to generate randomly a year's worth of Fahrenheit temperature data.A series of 365 random integers between 0 and 100 wouldn't fool the average observer.We could be more convincing by making today's temperature a(random)function of yesterday's temperature:if it is 85 degrees today,it is unlikely to be 15 degrees tomorrow. The same is true of English words:if this letter is a Q,then the next letter is quite likely to be a U.A generator can make more interesting text by making each letter a random function of its predecessor.We could,therefore,read a sample text and count how many times every letter follows an A,how many times they follow a B,and so on for each letter of the alphabet.When we write the random text,we produce the next letter as a random function of the current letter.The 'order-1"text was made by exactly this scheme: Order-1:tI amy,vin.id wht omanly heay atuss n macon aresethe hired boutwhe t,tl,ad torurest t plur I wit hengamind tarer-plarody thishand. Order-2:Ther I the heingoind of-pleat,blur it dwere wing waske hat trooss.Yout lar on wassing,an sit." "Yould,""I that vide was nots ther. Order-3:I has them the saw the secorrow.And wintails on my my ent,thinks,fore voyager lanated the been elsed helder was of him a very free bottlemarkable

Generating Text (Section 15.3 of Programming Pearls) How can you generate random text? A classic approach is to let loose that poor monkey on his aging typewriter. If the beast is equally likely to hit any lower case letter or the space bar, the output might look like this: uzlpcbizdmddk njsdzyyvfgxbgjjgbtsak rqvpgnsbyputvqqdtmgltz ynqotqigexjumqphujcfwn ll jiexpyqzgsdllgcoluphl sefsrvqqytjakmav bfusvirsjl wprwqt This is pretty unconvincing English text. If you count the letters in word games (like Scrabble or Boggle), you will notice that there are different numbers of the various letters. There are many more A's, for instance, than there are Z's. A monkey could produce more convincing text by counting the letters in a document -- if A occurs 300 times in the text while B occurs just 100 times, then the monkey should be 3 times more likely to type an A than a B. This takes us a small step closer to English: saade ve mw hc n entt da k eethetocusosselalwo gx fgrsnoh,tvettaf aetnlbilo fc lhd okleutsndyeoshtbogo eet ib nheaoopefni ngent Most events occur in context. Suppose that we wanted to generate randomly a year's worth of Fahrenheit temperature data. A series of 365 random integers between 0 and 100 wouldn't fool the average observer. We could be more convincing by making today's temperature a (random) function of yesterday's temperature: if it is 85 degrees today, it is unlikely to be 15 degrees tomorrow. The same is true of English words: if this letter is a Q, then the next letter is quite likely to be a U. A generator can make more interesting text by making each letter a random function of its predecessor. We could, therefore, read a sample text and count how many times every letter follows an A, how many times they follow a B, and so on for each letter of the alphabet. When we write the random text, we produce the next letter as a random function of the current letter. The ``order-1'' text was made by exactly this scheme: Order-1: t I amy, vin. id wht omanly heay atuss n macon aresethe hired boutwhe t, tl, ad torurest t plur I wit hengamind tarer-plarody thishand. Order-2: Ther I the heingoind of-pleat, blur it dwere wing waske hat trooss. Yout lar on wassing, an sit." "Yould," "I that vide was nots ther. Order-3: I has them the saw the secorrow. And wintails on my my ent, thinks, fore voyager lanated the been elsed helder was of him a very free bottlemarkable

Order-4:His heard.""Exactly he very glad trouble,and by Hopkins!That it on of the who difficentralia. He rushed likely?""Blood night that. [Click for more examples of letter-level Markov text] We can extend this idea to longer sequences of letters.The order-2 text was made by generating each letter as a function of the two letters preceding it(a letter pair is often called a digram).The digram TH,for instance,is often followed in English by the vowels A,E,I,O,U and Y,less frequently by R and W,and rarely by other letters.The order-3 text is built by choosing the next letter as a function of the three previous letters(a trigram).By the time we get to the order-4 text,most words are English,and you might not be surprised to learn that it was generated from a Sherlock Holmes story (''The Adventure of Abbey Grange").A classically educated reader of a draft of this column commented that this sequence of fragments reminded him of the evolution from Old English to Victorian English. Readers with a mathematical background might recognize this process as a Markov chain.One state represents each k-gram,and the odds of going from one to another don't change,so this is a'finite-state Markov chain with stationary transition probabilities". We can also generate random text at the word level.The dumbest approach is to spew forth the words in a dictionary at random.A slightly better approach reads a document,counts each word,and then selects the next word to be printed with the appropriate probability.(The programs in Section 15.1 use tools appropriate for such tasks.)We can get more interesting text,though,by using Markov chains that take into account a few preceding words as they generate the next word.Here is some random text produced after reading a draft of the first 14 columns of this book Order-1:The table shows how many contexts;it uses two or equal to the sparse matrices were not chosen. In Section 13.1,for a more efficient that'the more time was published by calling recursive structure translates to build scaffolding to try to know of selected and testing and more robust and a binary search). Order-2:The program is guided by verification ideas,and the second errs in the STL implementation (which guarantees good worst-case performance),and is especially rich in speedups due to Gordon Bell. Everything should be to use a macro:for n=10,000,its run time;that point Martin picked up from his desk Order-3:A Quicksort would be quite efficient for the main-memory sorts,and it requires only a few distinct values in this particular problem,we can write them all down in the program,and they were making progress towards a solution at a snail's pace. The order-1 text is almost readable aloud,while the order-3 text consists of very long phrases from the original input,with random transitions between them.For purposes of parody,order-2 text is usually juiciest. [Click for more examples of word-level Markov text] I first saw letter-level and word-level order-k approximations to English text in Shannon's 1948 classic Mathematical Theory of Communication.Shannon writes,'To construct [order-1 letter-level text]for example, one opens a book at random and selects a letter at random on the page.This letter is recorded.The book is then opened to another page and one reads until this letter is encountered.The succeeding letter is then recorded. Turning to another page this second letter is searched for and the succeeding letter recorded,etc.A similar process was used for [order-1 and order-2 letter-level text,and order-0 and order-1 word-level text].It would be interesting

Order-4: His heard." "Exactly he very glad trouble, and by Hopkins! That it on of the who difficentralia. He rushed likely?" "Blood night that. [Click for more examples of letter-level Markov text] We can extend this idea to longer sequences of letters. The order-2 text was made by generating each letter as a function of the two letters preceding it (a letter pair is often called a digram). The digram TH, for instance, is often followed in English by the vowels A, E, I, O, U and Y, less frequently by R and W, and rarely by other letters. The order-3 text is built by choosing the next letter as a function of the three previous letters (a trigram). By the time we get to the order-4 text, most words are English, and you might not be surprised to learn that it was generated from a Sherlock Holmes story (``The Adventure of Abbey Grange''). A classically educated reader of a draft of this column commented that this sequence of fragments reminded him of the evolution from Old English to Victorian English. Readers with a mathematical background might recognize this process as a Markov chain. One state represents each k-gram, and the odds of going from one to another don't change, so this is a ``finite-state Markov chain with stationary transition probabilities''. We can also generate random text at the word level. The dumbest approach is to spew forth the words in a dictionary at random. A slightly better approach reads a document, counts each word, and then selects the next word to be printed with the appropriate probability. (The programs in Section 15.1 use tools appropriate for such tasks.) We can get more interesting text, though, by using Markov chains that take into account a few preceding words as they generate the next word. Here is some random text produced after reading a draft of the first 14 columns of this book: Order-1: The table shows how many contexts; it uses two or equal to the sparse matrices were not chosen. In Section 13.1, for a more efficient that ``the more time was published by calling recursive structure translates to build scaffolding to try to know of selected and testing and more robust and a binary search). Order-2: The program is guided by verification ideas, and the second errs in the STL implementation (which guarantees good worst-case performance), and is especially rich in speedups due to Gordon Bell. Everything should be to use a macro: for n=10,000, its run time; that point Martin picked up from his desk Order-3: A Quicksort would be quite efficient for the main-memory sorts, and it requires only a few distinct values in this particular problem, we can write them all down in the program, and they were making progress towards a solution at a snail's pace. The order-1 text is almost readable aloud, while the order-3 text consists of very long phrases from the original input, with random transitions between them. For purposes of parody, order-2 text is usually juiciest. [Click for more examples of word-level Markov text] I first saw letter-level and word-level order-k approximations to English text in Shannon's 1948 classic Mathematical Theory of Communication. Shannon writes, ``To construct [order-1 letter-level text] for example, one opens a book at random and selects a letter at random on the page. This letter is recorded. The book is then opened to another page and one reads until this letter is encountered. The succeeding letter is then recorded. Turning to another page this second letter is searched for and the succeeding letter recorded, etc. A similar process was used for [order-1 and order-2 letter-level text, and order-0 and order-1 word-level text]. It would be interesting