Words (Section 15.1 of Programming Programming Pearls) Our first problem is to produce a list of the words contained in a document.(Feed such a program a few hundred books,and you have a fine start at a word list for a dictionary.)But what exactly is a word?We'll use the trivial definition of a sequence of Second Edition characters surrounded by white space,but this means that web pages will contain manywords"like<html>",<body>"and ".Problem 1 asks how you might avoid such problems. Jon Bentley Our first C++program uses the sets and strings of the Standard Template Library,in a slight modification of the program in Solution 1.1: int main(void) set S; set::iterator j; string ti while (cin >t) S.insert(t); for (j=S.begin();j!=S.end();++j) cout<<*j<<"\n"; return 0; The while loop reads the input and inserts each word into the set S(by the STL specification,duplicates are ignored).The for loop then iterates through the set,and writes the words in sorted order.This program is elegant and fairly efficient(more on that topic soon). Our next problem is to count the number of times each word occurs in the document.Here are the 21 most common words in the King James Bible,sorted in decreasing numeric order and aligned in three columns to save space: the 62053 sha119756 they 6890 and 38546 he 9506 be 6672 of 34375 unto 8929 is 6595 to 13352 I 8699 with 5949 And12734 his 8352 not 5840 that 12428 7940 a115238 in12154 for 7139 thou 4629 Almost eight percent of the 789,616 words in the text were the word'the"(as opposed to 16 percent of the words in this sentence).By our definition of word,'and"and'And"have two separate counts

Words (Section 15.1 of Programming Pearls) Our first problem is to produce a list of the words contained in a document. (Feed such a program a few hundred books, and you have a fine start at a word list for a dictionary.) But what exactly is a word? We'll use the trivial definition of a sequence of characters surrounded by white space, but this means that web pages will contain many ``words'' like ``<html>'', ``<body>'' and `` ''. Problem 1 asks how you might avoid such problems. Our first C++ program uses the sets and strings of the Standard Template Library, in a slight modification of the program in Solution 1.1: int main(void) { set S; set::iterator j; string t; while (cin >> t) S.insert(t); for (j = S.begin(); j != S.end(); ++j) cout << *j << "\n"; return 0; } The while loop reads the input and inserts each word into the set S (by the STL specification, duplicates are ignored). The for loop then iterates through the set, and writes the words in sorted order. This program is elegant and fairly efficient (more on that topic soon). Our next problem is to count the number of times each word occurs in the document. Here are the 21 most common words in the King James Bible, sorted in decreasing numeric order and aligned in three columns to save space: the 62053 shall 9756 they 6890 and 38546 he 9506 be 6672 of 34375 unto 8929 is 6595 to 13352 I 8699 with 5949 And 12734 his 8352 not 5840 that 12428 a 7940 all 5238 in 12154 for 7139 thou 4629 Almost eight percent of the 789,616 words in the text were the word ``the'' (as opposed to 16 percent of the words in this sentence). By our definition of word, ``and'' and ``And'' have two separate counts

[Click for more examples of word frequency counts. These counts were produced by the following C++program,which uses the Standard Template Library map to associate an integer count with each string: int main(void) map M; map:iteratorj; string t; while (cin >t) M[t]++; for (j=M.begin();!M.end();++j) cout <j->first <""<j->second <"\n"; return 0; The while statement inserts each word t into the map Mand increments the associated counter (which is initialized to zero at initialization).The for statement iterates through the words in sorted order and prints each word (first) and its count (second). This C++code is straightforward,succinct and surprisingly fast.On my machine,it takes 7.6 seconds to process the Bible.About 2.4 seconds go to reading,4.9 seconds to the insertions,and 0.3 seconds to writing the ouput. We can reduce the processing time by building our own hash table,using nodes that contain a pointer to a word,a count of how often the word has been seen,and a pointer to the next node in the table.Here is the hash table after inserting the strings'in",the"and''in",in the unlikely event that both strings hash to 1: bin 0 count word next the in We'll implement the hash table with this C structure: typedef struct node *nodeptr; typedef struct node char *word; int count; nodeptr next; node; Even by our loose definition of''word",the Bible has only 29,131 distinct words.We'll follow the old lore of using a prime number near that for our hash table size,and the popular multiplier of 31: #define NHASH 29989 #define MULT 31

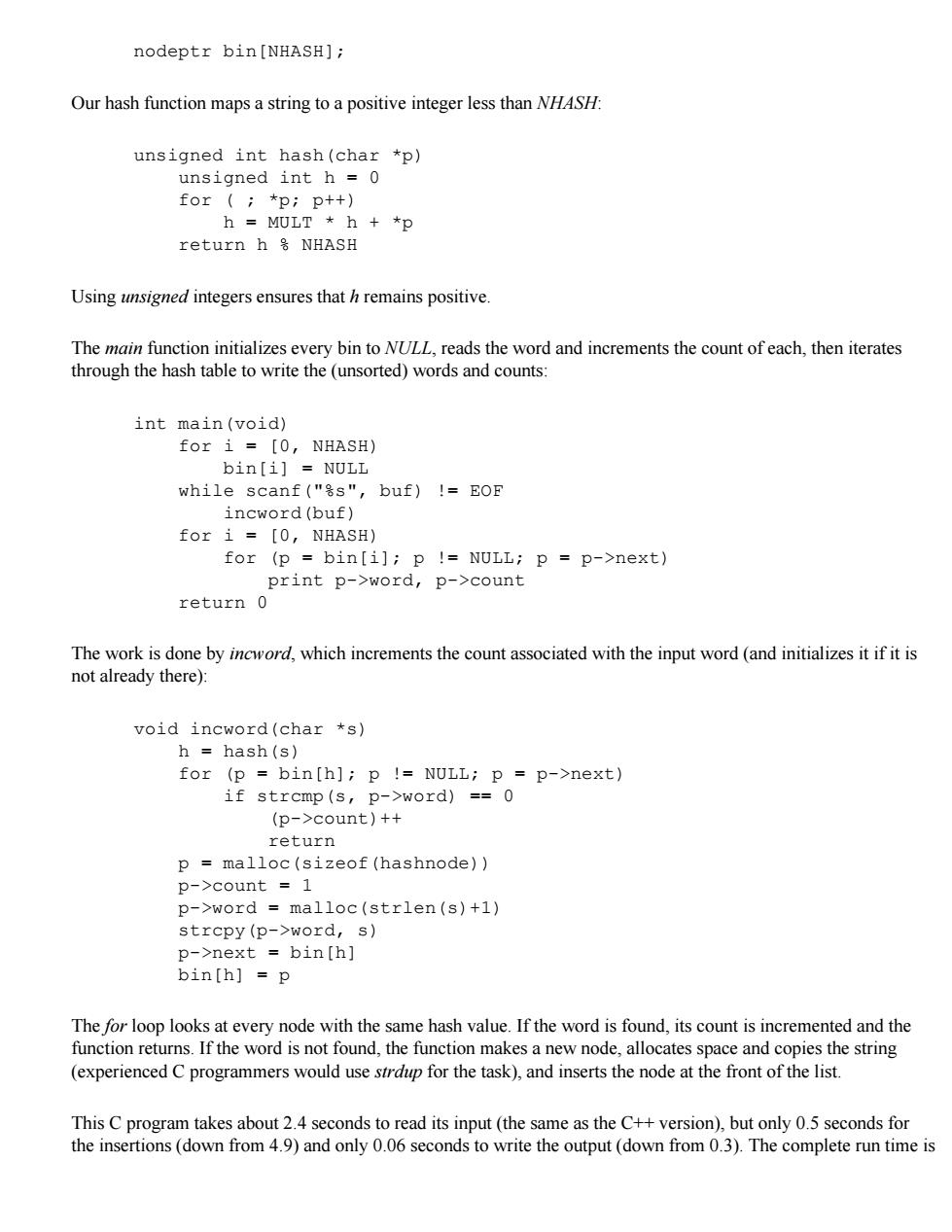

[Click for more examples of word frequency counts.] These counts were produced by the following C++ program, which uses the Standard Template Library map to associate an integer count with each string: int main(void) { map M; map::iterator j; string t; while (cin >> t) M[t]++; for (j = M.begin(); j != M.end(); ++j) cout << j->first << " " << j->second << "\n"; return 0; } The while statement inserts each word t into the map M and increments the associated counter (which is initialized to zero at initialization). The for statement iterates through the words in sorted order and prints each word (first) and its count (second). This C++ code is straightforward, succinct and surprisingly fast. On my machine, it takes 7.6 seconds to process the Bible. About 2.4 seconds go to reading, 4.9 seconds to the insertions, and 0.3 seconds to writing the ouput. We can reduce the processing time by building our own hash table, using nodes that contain a pointer to a word, a count of how often the word has been seen, and a pointer to the next node in the table. Here is the hash table after inserting the strings ``in'', ``the'' and ``in'', in the unlikely event that both strings hash to 1: We'll implement the hash table with this C structure: typedef struct node *nodeptr; typedef struct node { char *word; int count; nodeptr next; } node; Even by our loose definition of ``word'', the Bible has only 29,131 distinct words. We'll follow the old lore of using a prime number near that for our hash table size, and the popular multiplier of 31: #define NHASH 29989 #define MULT 31

nodeptr bin [NHASH]; Our hash function maps a string to a positive integer less than NHASH: unsigned int hash(char *p) unsigned int h =0 for(;*p;p++) h MULT h *p return h号NHASH Using unsigned integers ensures that h remains positive. The main function initializes every bin to NULL,reads the word and increments the count of each,then iterates through the hash table to write the (unsorted)words and counts: int main(void) for i=[0,NHASH) bin[i]NULL while scanf("号s",buf)!=EoF incword(buf) for i=[0,NHASH) for (p bin[i];p !NULL;p p->next) print p->word,p->count return 0 The work is done by incword,which increments the count associated with the input word(and initializes it if it is not already there): void incword(char *s) h hash(s) for (p bin[h];p !NULL;p p->next) if strcmp(s,p->word)==0 (p->count)++ return p malloc(sizeof(hashnode)) p->count 1 p->word malloc(strlen(s)+1) strcpy(p->word,s) p->next bin[h] bin[h]p The for loop looks at every node with the same hash value.If the word is found,its count is incremented and the function returns.If the word is not found,the function makes a new node,allocates space and copies the string (experienced C programmers would use strdup for the task),and inserts the node at the front of the list. This C program takes about 2.4 seconds to read its input (the same as the C++version),but only 0.5 seconds for the insertions(down from 4.9)and only 0.06 seconds to write the output (down from 0.3).The complete run time is

nodeptr bin[NHASH]; Our hash function maps a string to a positive integer less than NHASH: unsigned int hash(char *p) unsigned int h = 0 for ( ; *p; p++) h = MULT * h + *p return h % NHASH Using unsigned integers ensures that h remains positive. The main function initializes every bin to NULL, reads the word and increments the count of each, then iterates through the hash table to write the (unsorted) words and counts: int main(void) for i = [0, NHASH) bin[i] = NULL while scanf("%s", buf) != EOF incword(buf) for i = [0, NHASH) for (p = bin[i]; p != NULL; p = p->next) print p->word, p->count return 0 The work is done by incword, which increments the count associated with the input word (and initializes it if it is not already there): void incword(char *s) h = hash(s) for (p = bin[h]; p != NULL; p = p->next) if strcmp(s, p->word) == 0 (p->count)++ return p = malloc(sizeof(hashnode)) p->count = 1 p->word = malloc(strlen(s)+1) strcpy(p->word, s) p->next = bin[h] bin[h] = p The for loop looks at every node with the same hash value. If the word is found, its count is incremented and the function returns. If the word is not found, the function makes a new node, allocates space and copies the string (experienced C programmers would use strdup for the task), and inserts the node at the front of the list. This C program takes about 2.4 seconds to read its input (the same as the C++ version), but only 0.5 seconds for the insertions (down from 4.9) and only 0.06 seconds to write the output (down from 0.3). The complete run time is

3.0 seconds(down from 7.6),and the processing time is 0.55 seconds(down from 5.2).Our custom-made hash table(in 30 lines of C)is an order of magnitude faster than the maps from the C++Standard Template Library. This little exercise illustrates the two main ways to represent sets of words.Balanced search trees operate on strings as indivisible objects;these structures are used in most implementations of the STL's sets and maps.They always keep the elements in sorted order,so they can efficiently perform operations such as finding a predecessor or reporting the elements in order.Hashing,on the other hand,peeks inside the characters to compute a hash function, and then scatters keys across a big table.It is very fast on the average,but it does not offer the worst-case performance guarantees of balanced trees,or support other operations involving order. Next:Section 15.2.Phrases. Copyright 1999 Lucent Technologies.All rights reserved.Wed 18 Oct 2000

3.0 seconds (down from 7.6), and the processing time is 0.55 seconds (down from 5.2). Our custom-made hash table (in 30 lines of C) is an order of magnitude faster than the maps from the C++ Standard Template Library. This little exercise illustrates the two main ways to represent sets of words. Balanced search trees operate on strings as indivisible objects; these structures are used in most implementations of the STL's sets and maps. They always keep the elements in sorted order, so they can efficiently perform operations such as finding a predecessor or reporting the elements in order. Hashing, on the other hand, peeks inside the characters to compute a hash function, and then scatters keys across a big table. It is very fast on the average, but it does not offer the worst-case performance guarantees of balanced trees, or support other operations involving order. Next: Section 15.2. Phrases. Copyright © 1999 Lucent Technologies. All rights reserved. Wed 18 Oct 2000

Problems (Section 15.5 of Programming Pearls) The Solutions to Column 15 give answers for some of these problems. 1.Throughout this column we have used the simple definition Second Edition that words are separated by white space.Many real documents, such as those in HTML or RTF,contain formatting commands. How could you deal with such commands?Are there any other tasks that you might need to perform? Jon Bentley 2.On a machine with ample main memory,how could you use the C++STL sets or maps to solve the searching problem in Section 13.8?How much memory does it consume compared to Mcllroy's structure? 3.How much speedup can you achieve by incorporating the special-purpose malloc of Solution 9.2 into the hashing program of Section 15.1? 4.When a hash table is large and the hash function scatters the data well,almost every list in the table has only a few elements.If either of these conditions is violated,though,the time spent searching down the list can be substantial.When a new string is not found in the hash table in Section 15.1,it is placed at the front of the list.To simulate hashing trouble,set NHASH to 1 and experiment with this and other list strategies,such as appending to the end of the list,or moving the most recently found element to the front of the list. 5.When we looked at the output of the word frequency programs in Section 15.1,it was most interesting to print the words in decreasing frequency.How would you modify the C and C++programs to accomplish this task?How would you print only the Mmost common words,where Mis a constant such as 10 or 1000? 6.Given a new input string,how would you search a suffix array to find the longest match in the stored text?How would you build a GUI interface for this task? 7.Our program for finding duplicated strings was very fast for'typical"inputs,but it can be very slow(greater than quadratic)for some inputs.Time the program on such an input.Do such inputs ever arise in practice? 8.How would you modify the program for finding duplicated strings to find the longest string that occurs more than Mtimes? 9.Given two input texts,find the longest string that occurs in both. 10.Show how to reduce the number of pointers in the duplication program by pointing only to suffixes that start on word boundaries.What effect does this have on the output produced by the program?

Problems (Section 15.5 of Programming Pearls) The Solutions to Column 15 give answers for some of these problems. 1. Throughout this column we have used the simple definition that words are separated by white space. Many real documents, such as those in HTML or RTF, contain formatting commands. How could you deal with such commands? Are there any other tasks that you might need to perform? 2. On a machine with ample main memory, how could you use the C++ STL sets or maps to solve the searching problem in Section 13.8? How much memory does it consume compared to McIlroy's structure? 3. How much speedup can you achieve by incorporating the special-purpose malloc of Solution 9.2 into the hashing program of Section 15.1? 4. When a hash table is large and the hash function scatters the data well, almost every list in the table has only a few elements. If either of these conditions is violated, though, the time spent searching down the list can be substantial. When a new string is not found in the hash table in Section 15.1, it is placed at the front of the list. To simulate hashing trouble, set NHASH to 1 and experiment with this and other list strategies, such as appending to the end of the list, or moving the most recently found element to the front of the list. 5. When we looked at the output of the word frequency programs in Section 15.1, it was most interesting to print the words in decreasing frequency. How would you modify the C and C++ programs to accomplish this task? How would you print only the M most common words, where M is a constant such as 10 or 1000? 6. Given a new input string, how would you search a suffix array to find the longest match in the stored text? How would you build a GUI interface for this task? 7. Our program for finding duplicated strings was very fast for ``typical'' inputs, but it can be very slow (greater than quadratic) for some inputs. Time the program on such an input. Do such inputs ever arise in practice? 8. How would you modify the program for finding duplicated strings to find the longest string that occurs more than M times? 9. Given two input texts, find the longest string that occurs in both. 10. Show how to reduce the number of pointers in the duplication program by pointing only to suffixes that start on word boundaries. What effect does this have on the output produced by the program?