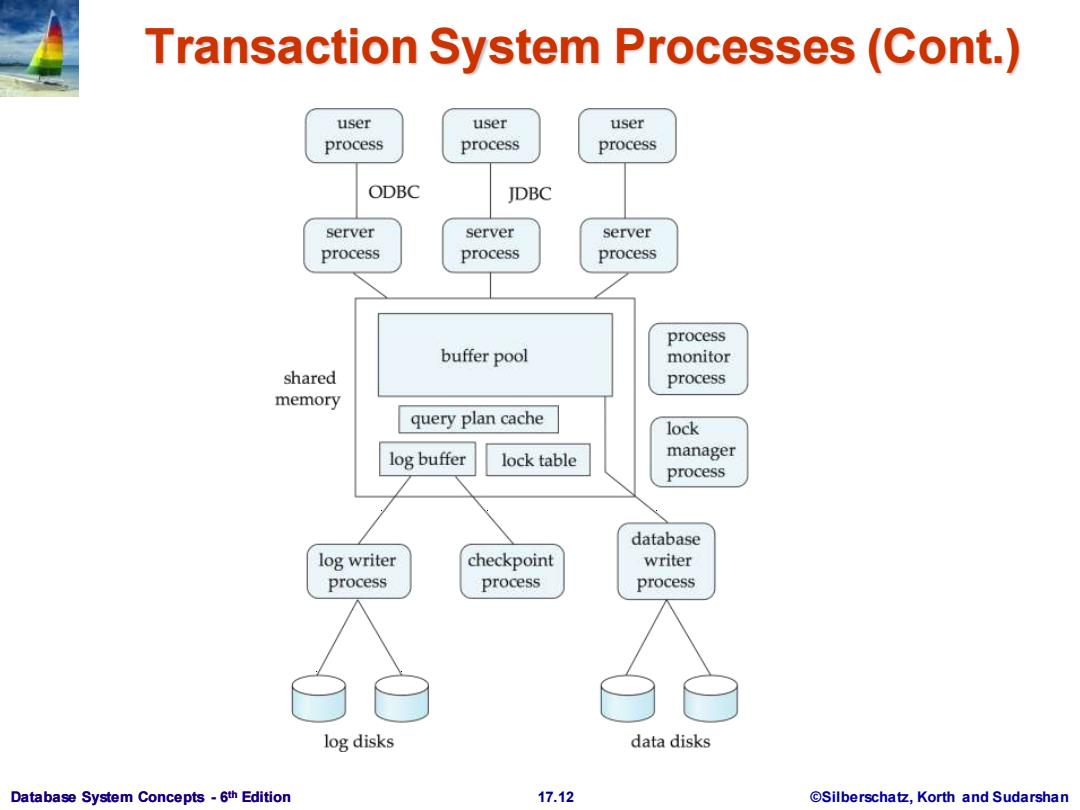

Transaction System Processes (Cont.)

[Figure: process structure of a transaction server. Components shown: user processes; ODBC/JDBC; server processes; process monitor process; lock manager process; log writer process; checkpoint process; database writer process; shared memory (buffer pool, query plan cache, log buffer, lock table); log disks; data disks.]

Database System Concepts, 6th Edition, 17.12 ©Silberschatz, Korth and Sudarshan


Transaction System Processes (Cont.)

- Shared memory contains shared data:
  - Buffer pool
  - Lock table
  - Log buffer
  - Cached query plans (reused if the same query is submitted again)
- All database processes can access shared memory.
- To ensure that no two processes access the same data structure at the same time, database systems implement mutual exclusion using either:
  - Operating system semaphores
  - Atomic instructions such as test-and-set
- To avoid the overhead of interprocess communication for lock request/grant, each database process operates directly on the lock table instead of sending requests to the lock manager process.
  - The lock manager process is still used for deadlock detection.
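
The idea of guarding a shared lock table with a test-and-set style latch can be sketched as follows. This is only an illustration under several assumptions: threads stand in for database processes, a Python object stands in for shared memory, and `Lock.acquire(blocking=False)` stands in for the hardware test-and-set instruction (it atomically sets a flag and reports whether the caller set it). The names `SpinLatch`, `SharedLockTable`, and `run_demo` are hypothetical.

```python
import threading
import time


class SpinLatch:
    """Mutual-exclusion latch built on a test-and-set style primitive.

    Assumption: a non-blocking Lock.acquire plays the role of test-and-set.
    """

    def __init__(self):
        self._flag = threading.Lock()

    def acquire(self):
        # Spin until our "test-and-set" succeeds.
        while not self._flag.acquire(blocking=False):
            time.sleep(0)  # yield so the current holder can make progress

    def release(self):
        self._flag.release()


class SharedLockTable:
    """Hypothetical lock table that every worker updates directly."""

    def __init__(self):
        self._latch = SpinLatch()
        self._owners = {}  # data item -> transaction holding an exclusive lock

    def try_lock(self, item, txn):
        """Grant the lock if the item is free or already held by txn."""
        self._latch.acquire()
        try:
            holder = self._owners.setdefault(item, txn)
            return holder == txn
        finally:
            self._latch.release()

    def unlock(self, item, txn):
        self._latch.acquire()
        try:
            if self._owners.get(item) == txn:
                del self._owners[item]
        finally:
            self._latch.release()


def run_demo(workers=8):
    """Several workers race for one item; return those that got the lock."""
    table = SharedLockTable()
    winners = []

    def worker(txn):
        if table.try_lock("account:42", txn):
            winners.append(txn)

    threads = [threading.Thread(target=worker, args=(f"T{i}",))
               for i in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return winners
```

No request is sent to a separate lock manager here: each worker takes the latch, edits the table, and releases the latch. Deadlock detection, which the slides leave to the lock manager process, is not modeled.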


Data Servers

- Used in high-speed LANs, in cases where:
  - The clients are comparable in processing power to the server
  - The tasks to be executed are compute-intensive
- Data are shipped to clients, where processing is performed, and the results are then shipped back to the server.
- This architecture requires full back-end functionality at the clients.
- Used in many object-oriented database systems.
- Issues:
  - Page shipping versus item shipping
  - Locking
  - Data caching
  - Lock caching


Data Servers (Cont.)

- Page shipping versus item shipping
  - A smaller unit of shipping means more messages.
  - It is worth prefetching related items along with the requested item.
  - Page shipping can be thought of as a form of prefetching.
- Locking
  - The overhead of requesting and getting locks from the server is high due to message delays.
  - The server can grant locks on requested and prefetched items; with page shipping, the transaction is granted a lock on the whole page.
  - Locks on a prefetched item can be called back by the server, and are returned by the client transaction if the prefetched item has not been used.
  - Locks on the page can be de-escalated to locks on items in the page when there are lock conflicts. Locks on unused items can then be returned to the server.
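
Both points above can be illustrated with a small sketch. It assumes integer item ids laid out consecutively on fixed-size pages and one request message per shipped unit. The names `request_messages` and `ClientLocks` are hypothetical and do not correspond to any real system's API.

```python
def request_messages(item_ids, items_per_page, page_shipping):
    """Count fetch requests needed to obtain the given items.

    Assumption: item i lives on page i // items_per_page, and each
    shipped unit (item or page) costs exactly one request message.
    """
    if page_shipping:
        return len({i // items_per_page for i in item_ids})
    return len(set(item_ids))


class ClientLocks:
    """Client-side bookkeeping for locks granted by a data server."""

    def __init__(self):
        self.pages = {}          # page id -> set of item ids on that page
        self.page_locks = set()  # pages locked as a whole
        self.item_locks = set()  # (page id, item id) locks after de-escalation
        self.used = set()        # (page id, item id) actually accessed

    def receive_page(self, page_id, items):
        # Page shipping: the transaction is granted a lock on the whole page.
        self.pages[page_id] = set(items)
        self.page_locks.add(page_id)

    def use(self, page_id, item_id):
        self.used.add((page_id, item_id))

    def on_conflict(self, page_id):
        """De-escalate the page lock and return the items handed back.

        Used items keep an item-level lock; locks on unused (merely
        prefetched) items are returned to the server.
        """
        if page_id not in self.page_locks:
            return set()
        self.page_locks.discard(page_id)
        returned = set()
        for item in self.pages[page_id]:
            if (page_id, item) in self.used:
                self.item_locks.add((page_id, item))
            else:
                returned.add(item)
        return returned
```

For example, fetching items 0 through 9 with four items per page takes 10 requests under item shipping but only 3 under page shipping, at the price of locking items that the transaction may never touch, which is what `on_conflict` then gives back.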


Data Servers (Cont.)

- Data caching
  - Data can be cached at the client even between transactions.
  - The client must check that cached data is up to date before using it (cache coherency).
  - The check can be done when requesting a lock on the data item.
- Lock caching
  - Locks can be retained by the client system even between transactions.
  - Transactions can acquire cached locks locally, without contacting the server.
  - The server calls back locks from clients when it receives a conflicting lock request. The client returns the lock once no local transaction is using it.
  - This is similar to de-escalation, but across transactions.
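
A rough sketch of how data caching and lock caching could fit together is given below. It assumes one exclusive lock per item, a per-item version counter that the server returns with each lock grant (used for the coherency check), and direct method calls in place of network messages. The `Server` and `Client` classes and all their methods are hypothetical.

```python
class Server:
    def __init__(self, data):
        self.data = dict(data)                # item -> value
        self.version = {k: 0 for k in data}   # item -> version counter
        self.lock_holder = {}                 # item -> client holding the lock
        self.messages = 0                     # requests received from clients

    def lock(self, client, item):
        """Grant the lock and return the current version, or None if busy."""
        self.messages += 1
        holder = self.lock_holder.get(item)
        if holder is not None and holder is not client:
            # Conflicting request: call the lock back from its holder.
            if not holder.call_back(item):
                return None
        self.lock_holder[item] = client
        return self.version[item]

    def fetch(self, item):
        self.messages += 1
        return self.data[item], self.version[item]

    def write(self, item, value):
        self.messages += 1
        self.data[item] = value
        self.version[item] += 1
        return self.version[item]


class Client:
    def __init__(self, server):
        self.server = server
        self.cache = {}            # item -> (value, version), kept between transactions
        self.cached_locks = set()  # locks retained between transactions
        self.in_use = set()        # items locked by a running local transaction

    def begin_access(self, item):
        """Lock item locally if possible; return its value, or None if busy."""
        if item not in self.cached_locks:
            version = self.server.lock(self, item)
            if version is None:
                return None
            self.cached_locks.add(item)
            # Coherency check piggybacked on the lock request.
            if item not in self.cache or self.cache[item][1] != version:
                self.cache[item] = self.server.fetch(item)
        self.in_use.add(item)
        return self.cache[item][0]

    def write(self, item, value):
        if item not in self.in_use:
            raise ValueError("item must be locked by a running transaction")
        self.cache[item] = (value, self.server.write(item, value))

    def end_access(self, item):
        # The transaction is done, but the lock and data stay cached.
        self.in_use.discard(item)

    def call_back(self, item):
        """Return the lock to the server unless a local transaction uses it."""
        if item in self.in_use:
            return False
        self.cached_locks.discard(item)
        return True
```

In this sketch a second access by the same client costs no server messages because the lock is still cached, while a stale cached value is detected by the version mismatch and refetched. A real system would queue the blocked request instead of returning `None`.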
