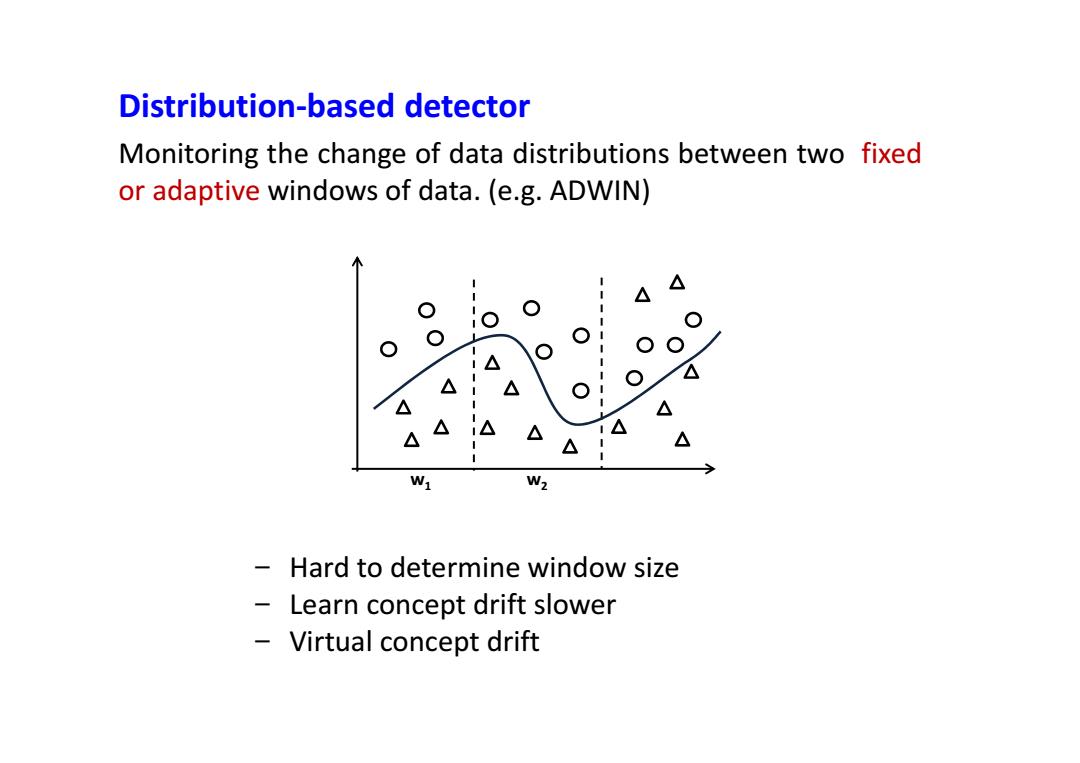


Distribution-based detector

Monitors the change in data distributions between two fixed or adaptive windows of data (e.g. ADWIN).

[Figure: the two data windows w1 and w2]

- Hard to determine the window size
- Slower to detect concept drift
- Virtual concept drift
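The two-window idea can be sketched as follows. The slide names no particular statistical test, so this sketch swaps in the two-sample Kolmogorov-Smirnov (KS) distance as one possible distribution comparison. A reference window w1 is filled first and then frozen, a sliding window w2 holds the most recent values, and a change is flagged when the KS distance exceeds the asymptotic critical value $c(\alpha)\sqrt{(n+m)/(nm)}$, with $c(0.05) \approx 1.358$. The class `TwoWindowKSDetector`, the window size of 50 and the significance level are illustrative assumptions, not part of the original material.

```python
import math
from collections import deque


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov distance: max gap between the empirical CDFs."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        v = min(a[i], b[j])
        # Step both empirical CDFs past every copy of v (handles ties).
        while i < len(a) and a[i] == v:
            i += 1
        while j < len(b) and b[j] == v:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


class TwoWindowKSDetector:
    """Hypothetical detector: frozen reference window w1 vs. sliding recent window w2."""

    def __init__(self, window_size=50, c_alpha=1.358):
        self.n = window_size
        self.c_alpha = c_alpha                 # asymptotic KS constant for alpha = 0.05
        self.w1 = []                           # reference window
        self.w2 = deque(maxlen=window_size)    # most recent window_size values

    def update(self, x):
        """Feed one value; return True if a distribution change is flagged."""
        if len(self.w1) < self.n:
            self.w1.append(x)  # still filling the reference window
            return False
        self.w2.append(x)
        if len(self.w2) < self.n:
            return False
        # Critical value for two samples of equal size n: c_alpha * sqrt(2 / n).
        threshold = self.c_alpha * math.sqrt(2.0 / self.n)
        if ks_statistic(self.w1, self.w2) > threshold:
            self.w1 = list(self.w2)  # the recent window becomes the new reference
            self.w2.clear()
            return True
        return False


# Values cycle through 0..9, then jump to 20..29 at index 150.
stream = [i % 10 for i in range(150)] + [i % 10 + 20 for i in range(150)]
detector = TwoWindowKSDetector()
drift_points = [t for t, x in enumerate(stream) if detector.update(x)]
print(drift_points)
```

This prints `[163, 213]`: the first flag comes 14 values after the shift, once enough shifted values have entered w2, and the second comes when w2 refills because the new reference still holds pre-shift values. The fixed `window_size` is exactly the parameter that the first drawback says is hard to choose.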

Adaptive Windowing (ADWIN)

The idea is simple: whenever two "large enough" subwindows of W exhibit "distinct enough" averages, one can conclude that the corresponding expected values are different, and the older portion of the window is dropped.

1. Initialize the window W.
2. For each time step t > 0:
   - add $x_t$ to the head of W, i.e. $W \leftarrow W \cup \{x_t\}$;
   - repeatedly drop the oldest elements from W until $|\hat{\mu}_{W_0} - \hat{\mu}_{W_1}| < \varepsilon_{\mathrm{cut}}$ holds for every split of W into $W = W_0 \cup W_1$;
   - output $\hat{\mu}_W$,

where $\hat{\mu}_W$ denotes the average of the elements currently in W.
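A deliberately naive sketch of the windowing rule described above, for illustration only. It assumes the stream values lie in [0, 1] and borrows the cut threshold from Bifet and Gavaldà's original ADWIN paper, which the slide does not state: $\varepsilon_{\mathrm{cut}} = \sqrt{\frac{1}{2m}\ln\frac{4}{\delta'}}$ with $m = 1/(1/|W_0| + 1/|W_1|)$ and $\delta' = \delta/|W|$. The class name `NaiveADWIN` and the confidence parameter `delta = 0.002` are illustrative choices. Every split point is checked, so each check costs O(|W|); the published algorithm compresses W into an exponential histogram so that far fewer cut points need checking.

```python
import math
from collections import deque


class NaiveADWIN:
    """Hypothetical, unoptimized ADWIN-style window for values in [0, 1]."""

    def __init__(self, delta=0.002):
        self.delta = delta     # confidence parameter (assumed value)
        self.window = deque()  # index 0 = oldest (tail), index -1 = newest (head)

    def _cut_needed(self):
        """True if some split W = W0 u W1 has |mean(W0) - mean(W1)| >= eps_cut."""
        n = len(self.window)
        total = sum(self.window)
        delta_prime = self.delta / n
        older_sum = 0.0
        for n0 in range(1, n):
            older_sum += self.window[n0 - 1]  # W0 = the n0 oldest elements
            n1 = n - n0
            mu0 = older_sum / n0
            mu1 = (total - older_sum) / n1
            m = 1.0 / (1.0 / n0 + 1.0 / n1)
            eps_cut = math.sqrt(math.log(4.0 / delta_prime) / (2.0 * m))
            if abs(mu0 - mu1) >= eps_cut:
                return True
        return False

    def update(self, x):
        """Add x to the head of W; drop old elements while some split differs too much."""
        self.window.append(x)
        drift = False
        while len(self.window) > 1 and self._cut_needed():
            self.window.popleft()  # drop the oldest element
            drift = True
        return drift

    @property
    def mean(self):
        """Current estimate mu_hat_W."""
        return sum(self.window) / len(self.window)


det = NaiveADWIN(delta=0.002)
flags = [det.update(0.0) for _ in range(500)] + [det.update(1.0) for _ in range(500)]
print(flags.index(True), round(det.mean, 3))
```

On this stream of 500 zeros followed by 500 ones, no cut happens during the first half; a few steps after the ones begin, the older zeros start being dropped and the window mean moves close to 1. No window size had to be fixed in advance, which is the point of the adaptive variant.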


Error-rate based detector

Captures concept drift from changes in classification performance, i.e. by comparing the current classification performance with the average historical error rate using statistical analysis (e.g. PHT, the Page-Hinkley test). Such detectors infer a time-changing concept indirectly, by relying on performance degradation.

- Sensitive to noise
- Hard to deal with gradual concept drift
- Depends heavily on the learning model itself
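As an example of an error-rate based detector, here is a minimal sketch of the Page-Hinkley test (PHT) in one common incremental form: with $\bar{x}_t$ the running mean, it accumulates $m_T = \sum_{t=1}^{T} (x_t - \bar{x}_t - \delta)$, tracks $M_T = \min_t m_t$, and raises an alarm when $m_T - M_T > \lambda$. The input is assumed to be a 0/1 error indicator from the classifier (1 = misclassified); the values `delta = 0.005` and `threshold = 50` (λ) are assumptions chosen for illustration, not values from the slide.

```python
class PageHinkley:
    """Page-Hinkley test for an increase in the mean of a stream, e.g. 0/1 errors."""

    def __init__(self, delta=0.005, threshold=50.0):
        self.delta = delta          # magnitude of change tolerated (assumed value)
        self.threshold = threshold  # lambda, the alarm threshold (assumed value)
        self.reset()

    def reset(self):
        self.t = 0
        self.mean = 0.0     # running mean of x_1..x_t
        self.cum = 0.0      # m_t = sum of (x_k - mean_k - delta)
        self.cum_min = 0.0  # M_t = minimum of m_k seen so far

    def update(self, x):
        """Feed one value; return True when m_t - M_t exceeds the threshold."""
        self.t += 1
        self.mean += (x - self.mean) / self.t
        self.cum += x - self.mean - self.delta
        self.cum_min = min(self.cum_min, self.cum)
        if self.cum - self.cum_min > self.threshold:
            self.reset()  # start monitoring the new concept from scratch
            return True
        return False


# Error rate 10% for 1000 predictions, then 50%.
errors = [1 if i % 10 == 0 else 0 for i in range(1000)] + [1 if i % 2 == 0 else 0 for i in range(1000)]
ph = PageHinkley()
alarms = [t for t, e in enumerate(errors) if ph.update(e)]
print(alarms)
```

The alarm fires some steps after index 1000, because $m_T$ has to climb λ above its running minimum. Lowering λ shortens the delay but makes the test react to noisy error bursts, which is the noise sensitivity listed among the drawbacks.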

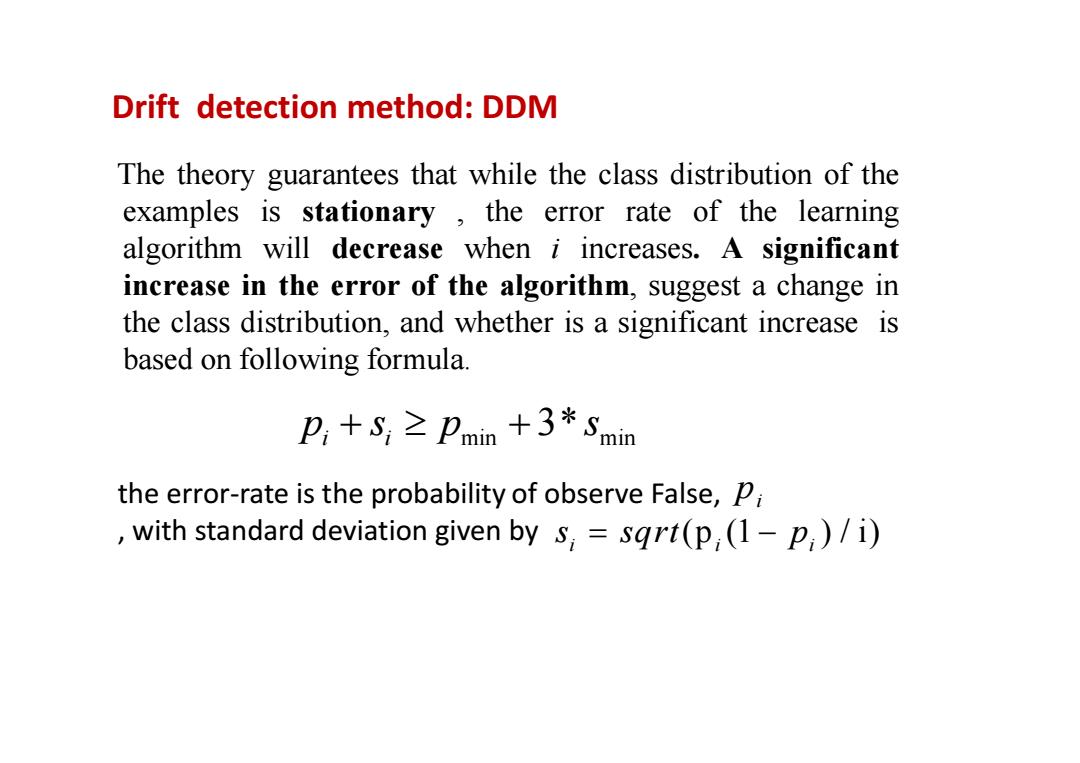

Drift detection method: DDM

The theory guarantees that, while the class distribution of the examples is stationary, the error rate of the learning algorithm decreases as the number of examples i increases. A significant increase in the error of the algorithm therefore suggests a change in the class distribution. Whether an increase is significant is decided by the following rule:

$$p_i + s_i \ge p_{\min} + 3\,s_{\min}$$

Here the error rate $p_i$ is the probability of observing False (a misclassification) after i examples, with standard deviation $s_i = \sqrt{p_i (1 - p_i) / i}$, and $p_{\min}$, $s_{\min}$ are the values of $p_i$ and $s_i$ recorded when $p_i + s_i$ reached its minimum.
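The DDM rule can be sketched as follows over a stream of 0/1 prediction errors (1 = misclassified). Two details are assumptions rather than content of this slide: a warning level at $p_{\min} + 2\,s_{\min}$, as in the original DDM paper by Gama et al. (2004), and a warm-up of 30 examples before testing starts, a commonly used default. The class name `DDM` and its parameters are illustrative.

```python
import math


class DDM:
    """Minimal sketch of DDM over a stream of 0/1 prediction errors (1 = misclassified)."""

    def __init__(self, min_instances=30, warning_level=2.0, drift_level=3.0):
        self.min_instances = min_instances  # assumed warm-up before testing
        self.warning_level = warning_level  # p_min + 2 * s_min (warning)
        self.drift_level = drift_level      # p_min + 3 * s_min (drift, as on the slide)
        self.reset()

    def reset(self):
        self.i = 0
        self.p = 0.0
        self.p_min = math.inf
        self.s_min = math.inf

    def update(self, error):
        """Feed one 0/1 error; return "stable", "warning" or "drift"."""
        self.i += 1
        self.p += (error - self.p) / self.i            # running error rate p_i
        s = math.sqrt(self.p * (1 - self.p) / self.i)  # s_i = sqrt(p_i (1 - p_i) / i)
        if self.i < self.min_instances:
            return "stable"
        if self.p + s < self.p_min + self.s_min:
            self.p_min, self.s_min = self.p, s  # new minimum of p_i + s_i
        # Strict ">" (the slide writes ">="), so p_i == p_min with s_min == 0 does not trigger.
        if self.p + s > self.p_min + self.drift_level * self.s_min:
            self.reset()  # drift: restart the statistics (a real learner would also be retrained)
            return "drift"
        if self.p + s > self.p_min + self.warning_level * self.s_min:
            return "warning"
        return "stable"


# Error rate 10% for 1000 predictions, then 50%.
errors = [1 if i % 10 == 0 else 0 for i in range(1000)] + [1 if i % 2 == 0 else 0 for i in range(1000)]
ddm = DDM()
states = [ddm.update(e) for e in errors]
print(states.index("warning"), states.index("drift"))
```

With this stream the detector stays stable during the first 1000 predictions, then enters the warning zone and signals drift a few dozen examples after the error rate jumps.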

2. Data Stream Classification
